Why the hell haven't we figured out the brain yet?

It seems like we've been at it for a while, and we certainly know a lot about the brain. But our understanding of how many basic cognitive functions are implemented in the brain is extremely vague.

Take decision-making, for example (the area I studied for my PhD). There are theories about which brain areas are involved and models of how neurons could implement "decisions", but there are fundamental questions we still can't answer, like how the brain represents and compares the values of the different options available.

Similarly, we have only a vague, high-level understanding of even common brain disorders like autism.

The "Neuroscience 101" explanations of cognitive functions and disorders say where certain functions take place, but little about how. You get explanations like "Language processing is done in Broca's and Wernicke's areas," but that's an extremely shallow account of how the incredibly complex tasks of producing and understanding language actually work.

It's not even clear that the processing happens in such a localized area. Some studies suggest these language areas might just be "hubs" that integrate distributed processing occurring across the entire cortex. So not only do we not really understand what the processing is, we're not even sure we've pinned down the where.

So what's stopping us from understanding the brain?

Understanding the brain is hard

Compare our understanding of the brain to our understanding of a computer. You can have the high-level Neuroscience 101 understanding of a computer—the hard drive is where long-term data is stored, the video card does graphics, the central processing unit processes the instructions of programs, etc. But if you want, you can go much deeper.

The basic unit at work in a computer is the transistor. A transistor has three terminals: an input, an output, and a control. If the control is "ON", current flows from the input to the output (like a switch in an electrical circuit, because that's literally what it is). We also know how to put transistors together to form logic gates, how to put logic gates together to create an Arithmetic Logic Unit, and so on, all the way up to a whole computer. With enough time, you could understand a computer from the high-level software down to the transistors.
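To make the "switches all the way up" idea concrete, here is a toy Python sketch under simplifying assumptions: each transistor is modeled as an ideal on/off switch (real transistors are analog devices), a NAND gate is built from two switches in series plus a pull-up, every other gate is composed from NAND, and the gates are chained into an adder, one of the core circuits inside an Arithmetic Logic Unit. All function names are illustrative, not taken from any real hardware library.

```python
# Toy model, not real electronics: a "transistor" is an ideal switch.
def transistor(control: bool, source: bool) -> bool:
    """The source value reaches the output only if the control is ON."""
    return source and control

def nand(a: bool, b: bool) -> bool:
    # Two switches in series connect the output to ground only when both
    # controls are ON; otherwise a pull-up keeps the output high.
    connected_to_ground = transistor(b, transistor(a, True))
    return not connected_to_ground

# Every other gate can be composed from NAND alone.
def not_(a: bool) -> bool:
    return nand(a, a)

def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))

def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))

def xor(a: bool, b: bool) -> bool:
    return and_(or_(a, b), nand(a, b))

def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add three bits built purely from gates; return (sum_bit, carry_out)."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return sum_bit, carry_out

def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry adder: one full adder per bit; overflow wraps around."""
    result, carry = 0, False
    for i in range(width):
        bit_x = bool((x >> i) & 1)
        bit_y = bool((y >> i) & 1)
        sum_bit, carry = full_adder(bit_x, bit_y, carry)
        result |= int(sum_bit) << i
    return result

print(add(5, 3))      # 8
print(add(200, 100))  # 44 (300 wraps around in 8 bits)
```

Every layer in this sketch is fully specified by the layer below it, which is the kind of bottom-up understanding the rest of this post argues we don't yet have for neurons.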

The analogous situation with a brain would be knowing what every neuron is doing at every moment, and having the theoretical work done to map high-level cognitive functions onto the activity of specific groups of neurons.

It's not a huge surprise that understanding these high-level cognitive functions is still aspirational. We still don't fully understand the brain of the tiny worm C. elegans, which has only 302 neurons, approximately 0.00000035% of the 86 billion neurons in a human brain. It turns out that neurons themselves are much more complex than transistors and can perform sophisticated computation far beyond the "binary if-statement" logic a transistor can perform. And when you combine neurons into networks, the complexity compounds.
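The percentage above is easy to check. A quick Python calculation, assuming the commonly cited estimate of 86 billion neurons in a human brain (the figure given later in this post):

```python
WORM_NEURONS = 302    # C. elegans
HUMAN_NEURONS = 86e9  # commonly cited estimate for an adult human brain

fraction = WORM_NEURONS / HUMAN_NEURONS
percent = fraction * 100

print(f"fraction: {fraction:.2e}")  # fraction: 3.51e-09
print(f"percent:  {percent:.8f}%")  # percent:  0.00000035%
```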

Not to mention that one of the defining features of brains is that, unlike computers, they change their own wiring by strengthening, weakening, pruning, and creating synaptic connections. These changes are central to cognitive functions like memory and learning.

So when it comes to higher-level cognitive functions, a big part of the problem is just the sheer complexity of what we're looking at. But that's not the only problem. We also lack the ability to get good data about them.

Getting brain data is hard

Our methods of getting neural data suck.

The tradeoffs you need to make when choosing a neural data collection method are between how much of the brain you can measure, the temporal resolution, the spatial resolution, and the invasiveness of the procedure.

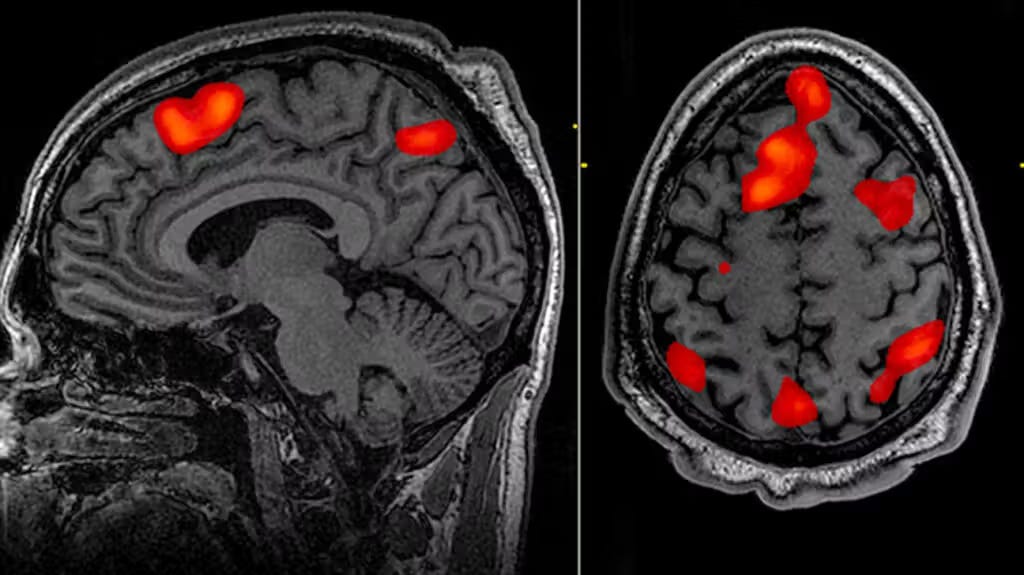

For example, fMRI is the standard method of measuring brain activity in humans. It has low invasiveness (no surgery required) and measures activity (indirectly, through changes in blood oxygenation) across the entire brain. But it has terrible temporal resolution (each data point spans seconds, while neurons fire on the order of milliseconds) and terrible spatial resolution (an fMRI "voxel" typically contains millions of neurons).
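A toy Python sketch of what coarse temporal resolution throws away. It assumes, very crudely, that each slow measurement is just the total number of spikes in a 2-second window; real fMRI tracks a sluggish blood-oxygen signal rather than spike counts, and the window length here is a hypothetical choice. Two neurons with completely different millisecond-scale firing patterns produce identical slow measurements:

```python
WINDOW_MS = 2000  # hypothetical sampling interval for the "slow" method

def spike_train(spike_times_ms: list[int], duration_ms: int) -> list[int]:
    """1 ms resolution: 1 where a spike occurs, 0 elsewhere."""
    train = [0] * duration_ms
    for t in spike_times_ms:
        train[t] = 1
    return train

def slow_samples(train: list[int], window_ms: int = WINDOW_MS) -> list[int]:
    """Sum activity within consecutive windows, discarding spike timing."""
    return [sum(train[i:i + window_ms]) for i in range(0, len(train), window_ms)]

# Neuron A fires a tight burst; neuron B fires the same number of spikes spread out.
burst = spike_train([100, 105, 110, 115, 120], 4000)
spread = spike_train([300, 700, 1100, 1500, 1900], 4000)

print(burst == spread)                              # False: different at 1 ms
print(slow_samples(burst) == slow_samples(spread))  # True: identical at 2 s
print(slow_samples(burst))                          # [5, 0]
```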

I've mentioned before how using fMRI to study the brain is a bit like feeling around your computer to see what parts are getting warmer. There is information there, and it can be helpful, but it's a far cry from giving you the information you need to fully understand what's going on.

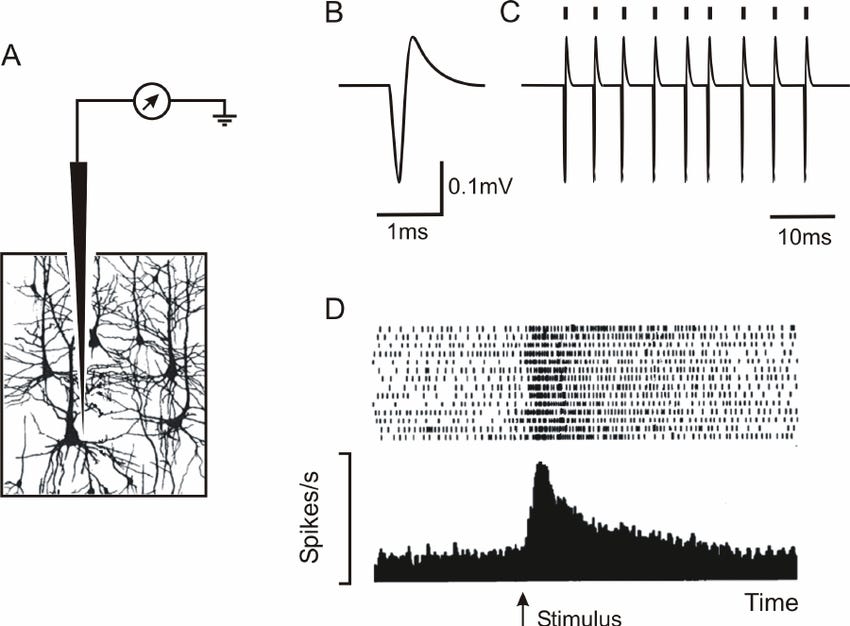

At the other extreme is single-unit recording. This involves putting electrodes close enough to neurons that you can record the electrical field of their action potentials. That means scientists need to place these electrodes physically into the brain. This is incredibly invasive, so it's only done in non-human animals or humans undergoing brain surgery. It also has really poor coverage, only allowing you to record a few neurons in one or two small areas of the brain at a time. But the spatial and temporal resolution are incredible—you can isolate the spiking of an individual neuron, and see the electrical waveform of the spike itself.

There are other methods with various tradeoffs. EEG, like fMRI, is noninvasive, but it has much better temporal resolution and worse spatial resolution. Optical imaging can be done in some animal models but is extremely invasive (e.g., it requires genetically modifying the brain cells and placing a camera where it can physically see them). There's no silver bullet that gives you perfect neural data.

So we're far from having the ideal data set where we know what every neuron is doing at any time in a human. But even if we had that magical data set, we would be far from understanding the brain, because…

Analyzing brain data is hard

Even if we had all the data you could want, our current analytical tools aren't up to the task.

In a fun paper, Eric Jonas and Konrad Kording generated data from an emulation of a microprocessor performing three "behaviors": playing the games Donkey Kong, Space Invaders, and Pitfall (the classic three functions of any computer processor). The idea was that they would have access to all the information a neuroscientist could want—the activity of all transistors, as well as the connections between them, during these behaviors. Then they could see what standard neuroscience analytical techniques would tell them about the (known) functioning of the microprocessor.

The results aren't promising for neuroscience.

What do you do with neural data once you have it? The obvious thing is to look at the neural/transistor activity and see how it correlates with an observable behavior. In neuroscience this is called looking for the tuning of the neurons.

Jonas and Kording looked at relationships between transistors and the luminance of the last pixel the microprocessor would have shown on its connected display. They found transistors with apparent tuning to luminance, but since they knew how the microprocessor actually worked, they knew those transistors weren't actually related to the pixel display:

[T]heir apparent tuning is not really insightful about their role. In our case, it probably is related to differences across game stages.

In technical terms, there is some confounding factor (in this case, game stage) that is related to both pixel luminance and transistor activity. This might seem silly with video games, but it's related to a serious problem in neuroscience. When I used to do this kind of work, we were always worried that the apparent tuning we found was because of some other factor, like attention. Lots of factors of a behavioral task impact attention, and so a relationship between a behavioral task and neural activity might just be picking that up.

This is a complicated way of saying that correlation is not causation.
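Here is a minimal simulated version of that confound in Python. The setup is hypothetical: a hidden "game stage" variable makes the screen brighter and, separately, makes a unit more active, while the unit never reads the pixel. Pooled across stages, the unit looks tuned to luminance; within a single stage, the correlation disappears. The effect sizes, noise levels, and sample size are arbitrary illustration choices, not values from Jonas and Kording's paper.

```python
import random

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient, computed from scratch."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(42)  # fixed seed so the run is reproducible
stages, luminance, activity = [], [], []
for _ in range(2000):
    stage = rng.choice([0, 1])  # hidden confound: which part of the game we're in
    stages.append(stage)
    # Stage 1 screens happen to be brighter...
    luminance.append(0.3 + 0.4 * stage + rng.gauss(0, 0.1))
    # ...and this unit is busier in stage 1, but it never reads the pixel.
    activity.append(5.0 + 3.0 * stage + rng.gauss(0, 1.0))

def within_stage(k: int) -> float:
    """Correlation restricted to trials from a single game stage."""
    lum = [lum_val for lum_val, s in zip(luminance, stages) if s == k]
    act = [act_val for act_val, s in zip(activity, stages) if s == k]
    return pearson(lum, act)

print(f"pooled r       = {pearson(luminance, activity):.2f}")  # strongly positive
print(f"stage 0 only r = {within_stage(0):.2f}")                # near zero
print(f"stage 1 only r = {within_stage(1):.2f}")                # near zero
```

Conditioning on the confound only works here because we know the hidden variable exists; with real neural data, the hard part is that you often don't know which factor (attention, game stage, or something else) to condition on.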

One way neuroscientists try to get at causation is through lesion experiments—in animals, you can literally destroy a neuron or brain area to see if it changes behavior.

Jonas and Kording show the amusing result that many transistors in their microprocessor affect only one of the three games. So there seem to be "Donkey Kong" transistors and "Space Invaders" transistors. If you eliminate one of those "Donkey Kong" transistors, only the "Donkey Kong" "behavior" is impacted. This shows a causal relationship between the transistor and the behavior, but of course, it tells us very little about what that transistor is actually doing.
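A toy lesion study in Python makes the problem visible. Everything here is hypothetical: five components with opaque IDs, a hidden table of what each one really computes, and a made-up map of which components each game happens to rely on. The experiment lesions one component at a time and records which games break, without ever looking at the hidden roles:

```python
# What each component actually computes: known to us, but invisible
# to the lesion experiment below.
TRUE_ROLE = {
    "t1": "add two numbers",
    "t2": "shift bits left",
    "t3": "compare two numbers",
    "t4": "count clock ticks",
    "t5": "shift bits right",
}

# Which components each "behavior" happens to rely on (made up for illustration).
USES = {
    "Donkey Kong":    {"t1", "t2", "t4", "t5"},
    "Space Invaders": {"t1", "t3", "t4"},
    "Pitfall":        {"t1", "t3", "t4"},
}

def runs_ok(game: str, lesioned: set[str]) -> bool:
    """A game runs only if none of the components it uses has been lesioned."""
    return not (USES[game] & lesioned)

def lesion_study() -> dict[str, list[str]]:
    """Lesion one component at a time and record which games break."""
    return {
        component: [game for game in USES if not runs_ok(game, {component})]
        for component in sorted(TRUE_ROLE)
    }

for component, broken in lesion_study().items():
    print(component, "->", broken)
```

Both t2 and t5 come out as "Donkey Kong" components, even though one shifts bits left and the other shifts bits right. The lesion establishes a causal link to the behavior but can't tell the two computations apart.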

The issue is with testing a narrow range of highly complex "behaviors". These transistors weren't made for Donkey Kong specifically. It just so happens that, for whatever reason, Donkey Kong uses these transistors and the other two games don't. A wider range of tested behaviors, along with theories about the common computational functions required, would tell us more about what these transistors are doing.

So it isn't just the analytical tools that matter. If we had behavioral experiments that better matched the microprocessor's discrete functions, then whenever a lesion broke one of those functions, we could say more about what the damaged component did. A better understanding of cognitive functions, informed by cognitive psychology, can help us design tasks, theorize about the functions those tasks require, and guide the interpretation of lesion (or tuning) results.

It also points to the need to test across a wide range of behavioral tasks to make sure results generalize.

You can’t blindly apply these analytical techniques to data and expect understanding to pop out. There's something missing: theory.

Computational/theoretical neuroscience is all about using math to build models of how the brain might solve certain problems. These models lead to more specific predictions about what particular neural circuits might do, and they constrain analyses so the data can distinguish between competing theories. The models can still be wrong, but they guide what relationships to look for in the data.

Science is hard

To sum up, neuroscience has two tough issues: it's hard to get good data, and it's hard to analyze the data in a way that tells you anything substantial.

But there's reason for optimism. Better data collection methods are constantly in the works, new behavioral tasks are being created, and theoretical neuroscientists are working on frameworks for wider ranges of neural computation.

But all of this is slow and iterative.

The brain is really, really complicated.

Part of it is that we are trying to study the most complicated objects we know of. Human brains have 86,000,000,000 neurons and 100,000,000,000,000 synaptic connections. The range of tasks they are capable of is the full range of human behaviors. We humans can do a lot of cool stuff. To do that, we need complicated brains.

But neuroscience isn't special in being slow. Science is hard and often requires a difficult mix of clever experiments and theoretical work to progress.

As told in Hasok Chang's Inventing Temperature, it took literally hundreds of years of iterative development for us to get a good theoretical handle on temperature and a reliable method of measuring it. Establishing a numerical temperature scale took some of the world's greatest scientific minds grappling with surprisingly difficult conceptual problems, ultimately culminating in a theory of temperature and the ubiquitous, well-calibrated thermometers we have today.

There's still a lot we don't understand about the brain, and there's an enormous amount of work to be done. But we know a lot: our understanding of the visual system and the kinds of processing it performs is sophisticated, if not complete. We'll create new analytical techniques, new tasks, new theories. Many of them will be dead ends. But some of them will give us a bit of insight, and we'll continue to pull ourselves up by our bootstraps to understand the brain.

But it's going to be a while.


If you’re a Substack writer and have been enjoying Cognitive Wonderland, consider adding it to your recommendations. I really appreciate the support.
