Mind Uploads Aren't Happening Anytime Soon

Mind uploads are bad science, good science fiction, and great philosophy

I love science fiction with mind scanning/emulating/uploading. A lot. It's far and away my favorite science fiction trope. An outsize portion of my favorite books feature mind uploads and the majority of my science fiction writings do as well.

But here's the thing: They're really implausible.

I wouldn't claim making a computer simulation of a mind/brain is theoretically impossible. I don't buy the various philosophical arguments against it (e.g. that simulating a brain on a computer and expecting it to produce a mind/consciousness is like simulating a rainstorm and expecting to get wet). Without getting too far down the rabbit hole, if a simulated brain produced speech and action identical to what the original human would produce (whether in a simulated world or through a robotic body), it's hard for me to swallow that it wouldn't be conscious. I think mind uploading is a coherent concept and love it as a thought-provoking trope (more on that below).

Instead, my claim is that fiction dramatically understates how hard creating mind uploads would be.

Popular media often portrays mind uploads as a near-future technology. Rob Sawyer's Mindscan takes place in 2045. The recent series Upload takes place in 2033 (okay, it's a comedy so maybe I shouldn't expect realistic science, but still!). My claim is that these are laughably optimistic timelines. Mind uploading would require fundamentally new techniques, if it even turns out to be physically possible at all.

This Is Your Brain On The Walking Dead

I think unrealistic depictions of current technology drive this idea that mind uploading is not too far off. Take, for example, this scene from The Walking Dead (okay, I probably shouldn't expect rigor in a zombie show either):

In the scene, the MRI shows individual neurons, their interconnections, and their electrical activity. This level of detail is the kind of thing a neuroscientist would sell their soul for. It blows the most high-tech invasive technologies we have today out of the water by orders of magnitude. That The Walking Dead is supposed to take place in the modern day is just plain weird. The technology they are showing here is Star Trek level techno-magic.

(The other amusing mistake in this scene is the subject is shot with a gun in the MRI machine. MRIs use powerful magnets. Here is what would happen if you got near one with a gun)

If we had this level of imaging technology, it might not be crazy to think mind uploading wasn't far off. We would be in a state like what's portrayed in shows like Upload: We just need some powerful computers and smart software.

Modern brain scanning technology is a spectacular marvel of modern science, but it's eons from what's pictured above. The first thing to appreciate is just how tiny brain cells are and what a complicated tangled mess our brains are. It's estimated there are about 50,000 neurons in a cubic millimeter of human cortex. Each neuron has about 5,000 synaptic connections with nearby cells, branching out and connecting chaotically.

A recent herculean effort using an MRI got a spatial resolution of 250 μm, meaning each voxel (a three-dimensional pixel) is a cube 250 μm on a side. That's one sixty-fourth of a cubic millimeter, which is incredible resolution, but at about 50,000 neurons per cubic millimeter there are still roughly 800 neurons in each voxel. For the purposes of mind uploading, it's like taking a picture of a colossal crowd where each pixel contains 800 people and expecting to go from this still image to identifying each individual, what they're doing, and the nature of all their relationships with others in the picture, despite 800 of them being represented in a single dot.
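The voxel arithmetic can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes the voxel is a cube 250 μm on a side and that neurons are spread evenly at the density of 50,000 per cubic millimeter quoted above. The function name is made up for illustration.

```python
# Rough check of how many neurons share one MRI voxel.
# Assumption: the voxel is a cube, and neurons are spread evenly at the
# cortical density quoted in the text (about 50,000 per cubic millimeter).

NEURONS_PER_MM3 = 50_000


def neurons_per_voxel(side_um: float, density_per_mm3: float = NEURONS_PER_MM3) -> float:
    """Estimate the neuron count in a cubic voxel with the given side length."""
    side_mm = side_um / 1000.0   # micrometers -> millimeters
    volume_mm3 = side_mm ** 3    # volume of the cube in cubic millimeters
    return volume_mm3 * density_per_mm3


if __name__ == "__main__":
    print(f"250 um voxel: about {neurons_per_voxel(250):.0f} neurons")
```

Changing `side_um` shows how quickly the count falls as the voxel shrinks, since the volume scales with the cube of the side length.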

Maybe MRI will get better, but there are limits to how good it can get—more powerful magnets would be necessary, and there's a limit to how powerful a magnet can be before harming tissues. There are limits to how well we can correct for micro-movements caused by breathing, heartbeats, or cell movements themselves. MRI can't scale to the point of getting us the resolution we would need for a true brain scan, and MRI is our best current technology for scanning brains… at least, living brains.

Mapping the dead

There are ways to get a better picture of a brain than through MRI, but they all involve killing the subject and slicing the brain into very thin slices. Probably not something future mind uploading companies would put in their ads.

To give you a sense of the current state of technology, scientists recently mapped out a cubic millimeter of a mouse brain using electron microscopy. They picked out individual neurons and what other neurons they connect to. This was an enormous effort, involving hundreds of scientists and hundreds of millions of dollars. Doing this for a larger brain would be much more complicated: as you increase the volume you're mapping, each neuron has a larger number of neurons it might connect to, so you get a combinatorial explosion of connection complexity. Still, if we continue to scale this technology, there's no fundamental reason we wouldn't be able to make a similar map of an entire human brain in the future.

The problem with this is we still wouldn't have anything close to what we need to simulate a brain.

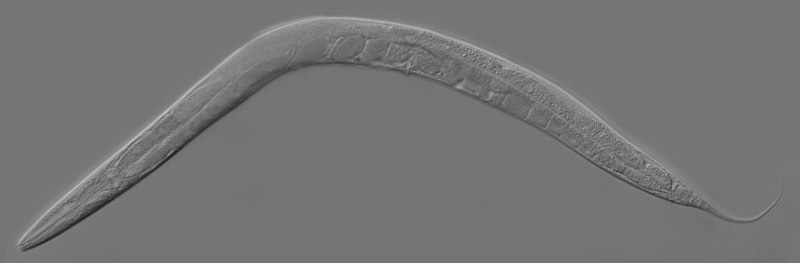

We have a full connectome (what they call a map of the brain, showing all connections between neurons) for C. elegans, a type of tiny worm. It has only 302 neurons, and the nervous system of each C. elegans is wired up the same way. It has been one of the most used model organisms in neuroscience and genetics for decades. We’ve enriched that connectome with additional information from literally thousands of studies looking at the same 302 neurons using the most invasive techniques we have, including many that are just not possible to use in humans. We know a lot about how its brain works, but even the most sophisticated models we have (e.g. OpenWorm) don’t cover the full range of the worm’s behavior. That’s right: with only 302 fully mapped neurons that are the same in every subject, we still can’t simulate these worm “brains”. Compare that to our 86,000,000,000 neurons, which are wired differently in each person.

Why is it so hard to simulate their nervous system if we know how all the neurons are hooked up? Having the wiring diagram doesn't tell us about the dynamics of the system: we know which neurons can talk to which, but not what kind of messages they send or how a neuron's current state might modulate that information. It's like expecting a blueprint to give you a detailed picture of the business that goes on inside of a building. It might give you an idea of the constraints (no one is cooking meals in the broom closet), but it will not give you a rich enough picture to predict every event and interaction between individuals that will take place in the building.

So even if we scaled up our current technology to where we could get a full human connectome, the brain we mapped would be left sliced thinner than deli meat, and we would have eliminated the spark that makes the brain a person: neural activity.

Capturing Neural Activity

If a connectome isn't enough to simulate a brain, what would be?

One possibility is trying to simulate neurons from first principles. If we have a rich picture of neurons and understand the rules governing their behavior, we should in principle be able to simulate them.

There are two issues with this approach. First, we would require information much richer than we have from connectomes (like the neurotransmitter each synapse releases, neural types, glial cells, etc). Given that developing a human connectome, where we are dealing with relatively large neurons, is still a distant pipe dream, we're far off from being able to produce a brain map rich enough to theoretically do this (though for an interesting look at some options, there is a speculative roadmap that goes through different imaging techniques produced by the now-defunct Future of Humanity Institute).

Second, we would need a better understanding of the functioning of the low-level mechanisms of the brain. There are still plenty of papers in molecular neuroscience, where we're trying to understand how the tiny molecular mechanisms in the brain work. There is still active research on protein pumps, a fundamental and relatively well-known part of neurons. We know glial cells (the cells in the brain that support neurons) impact neural activity, but don't understand how very well. To go from our current high-level understanding of brains to a perfect simulation (not a toy simulation for research purposes) requires fundamental leaps in our understanding of low-level mechanisms in the brain. If you're waiting for a full theory of neuroscience to give you a rich enough picture to perfectly simulate all neurons from an image, I suspect you'll be waiting a long time.

Alternatively, you could try to record the activity of neurons (while the person whose brain they constitute is still alive) and treat the neurons as a black box. If we can predict under what circumstances a neuron will fire, maybe we can simulate the brain without understanding the low-level mechanisms. Note that we are ignoring a lot here: neurons are more complicated than black boxes that either fire or don't fire (for example, synapses are plastic and change over time, some computation occurs in axons themselves, which modifies the downstream signaling of neural activity, etc.), but let's brush all that aside.

Thinking back to the scene from The Walking Dead, you might think our ability to record neural activity is better than it is. If you're thinking of non-invasive techniques (i.e. you don't have to stick anything in your brain), you could try placing electrodes on the scalp (EEG). With EEG, you typically use 32 "channels"—32 electrodes placed on the scalp. Given there are 86,000,000,000 neurons in the brain, each electrode is blurring together the activity of many, many neurons. This isn't anywhere near the individual neural activity we need.
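To put a number on that blurring, here is a minimal Python sketch that divides the brain's neuron count by the number of EEG channels. It is a deliberate oversimplification: real electrodes do not split neurons into equal shares, and mostly pick up activity near the surface. The function name and the even-split assumption are mine, used only for illustration.

```python
# How many neurons would each EEG channel "cover" if the brain's neurons
# were divided evenly among the electrodes? (A simplifying assumption:
# real scalp electrodes do not partition the brain this way.)

NEURONS_IN_BRAIN = 86_000_000_000  # figure quoted in the text


def neurons_per_channel(n_channels: int, n_neurons: int = NEURONS_IN_BRAIN) -> float:
    """Average number of neurons per electrode under an even split."""
    if n_channels <= 0:
        raise ValueError("n_channels must be positive")
    return n_neurons / n_channels


if __name__ == "__main__":
    per_channel = neurons_per_channel(32)
    print(f"32 channels: about {per_channel / 1e9:.1f} billion neurons per electrode")
```

Even multiplying the channel count many times over leaves each electrode averaging over an enormous population, which is the point of the paragraph above.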

You could instead go back to MRI. Functional MRI is a technique for imaging the brain's activity instead of its anatomy. It has significantly worse resolution than MRI does when used for anatomy, with voxels on the order of 3 mm on a side, many times larger than the already-too-large 250 μm voxels discussed above. It also doesn't directly track neural activity: the images you see of a brain "lighting up" show the BOLD (blood-oxygen-level-dependent) signal, a measure of oxygenation of the blood in different regions, which correlates with the level of activity in the area (with some time lag). Just like with EEG, this is nowhere near telling you which specific neurons are firing. It gives you at best a rough measure of how active a vast population of neurons is on average.
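"Many times larger" can be made concrete with a short Python sketch. It assumes both figures describe cubic voxels, about 3 mm on a side for functional MRI and 250 μm (0.25 mm) on a side for the anatomical scan. The helper name is hypothetical.

```python
# Compare the volume of a functional MRI voxel with an anatomical one.
# Assumption: both voxels are cubes, so volume scales with side length cubed.


def voxel_volume_ratio(large_side_mm: float, small_side_mm: float) -> float:
    """How many small cubic voxels fit inside one large cubic voxel."""
    if small_side_mm <= 0 or large_side_mm <= 0:
        raise ValueError("side lengths must be positive")
    return (large_side_mm / small_side_mm) ** 3


if __name__ == "__main__":
    ratio = voxel_volume_ratio(3.0, 0.25)
    print(f"One fMRI voxel holds about {ratio:.0f} anatomical voxels")
```

Because the ratio is cubed, a twelvefold difference in side length becomes a difference of more than a thousandfold in volume.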

To get the data we need, we need to again look at invasive techniques. The best methods we have for recording the activity of individual neurons involve getting an electrode physically close to the cells themselves. Neuroscientists routinely stick individual electrodes (or bundles of them) into brain tissue, get them close to the neurons, and record the activity (I used to do this kind of research). Recording different neurons in isolation won't get you what you need: the activity of any neuron connected to a given neuron might affect its activity, so if we want to predict what a neuron will do, we need a record of all neural activity together. In practice, recording a few neurons at a time is considered great, and recording hundreds at a time isn't unheard of. But somehow recording the tens of billions in a human brain at once isn't possible.

To get electrodes near the deeper neurons, you would need to ram right through the neurons in tissue higher up. It isn't possible to get electrodes in deep without destroying other neurons. This is fine if you're just recording a small localized area (as they do routinely in neurosurgery), but if you want to record the whole brain, you're turning the higher areas of the brain into Swiss cheese. And the idea of being sure you've captured every single neuron (and can identify which one you're recording) is a pipe dream.

The only plausible way to get recordings of all neurons would be some kind of nanotechnology. At this point, we're just talking wacky sci-fi technology. Yes, I guess it might be possible to unleash an army of nanobots that are small enough to crawl into a skull, cozy up to neurons, and record the activity in some way that can later be put all together. They would need to hang out in the brain for a long time for us to have a wide enough picture of the neural dynamics to have any hope of simulation. We also would need to map the activity they record onto any connectome we later produce. Then there is the problem of actually having enough computational power and code to simulate a brain (and body for it to interact with).

At this point, we're leagues from technology we have line of sight to. We're back in Star Trek techno-magic land. Best to keep mind uploads as fun science fiction rather than realistic futurology.

Why Mind Uploads Make Great Science Fiction

Despite my diatribe above (which, if anything, understates the difficulties), I still love mind uploads. They are incredibly philosophical ideas forcing us to confront head-on what we believe makes us us.

If I lay down to have my mind scanned for an upload, what would it be like? If the flesh-and-blood "me" was destroyed in the process, it's pretty simple: I would feel like I lay down for the scan and woke up in my simulated form.

But what if the original wasn't destroyed? Both the flesh-and-blood me and the computer program would feel like they were "me". Maybe you would favor treating the flesh and blood me as the real me, but why does it make sense to "demote" the simulated version just because the flesh-and-blood me survived? The copy would be the same data in either case, and the resulting mind would feel the same.

Splitting yourself like this leads to some uncomfortable questions, like "Who would I be in these situations?" This is all a restatement of Parfit's Teletransport Paradox, where his conclusion is that there is no fact of the matter about whether two individuals (spread through time or otherwise) are the same. In other words, if your mind was copied to the cloud, both or neither can be considered "you". There's no "right answer"; it's a matter of personal taste.

To put more of a bite into the scenario, we can think of situations where there would be real stakes: If you were going to die, but you could have your brain scanned, would you do it? What if you were scanned but you wouldn't die until an hour after the scan, would it still be "you" being uploaded? What if the gap was a day? A year? A second? A nanosecond?

I find these kinds of questions captivating. They challenge my intuitions and force me to rethink common assumptions about what makes a person a person. Playing with these ideas is a fun and challenging way to pose questions about what it is we value—if people are ends in themselves, what happens when we can copy them (or change them) at will?

Science fiction tropes like this give a way of exploring the implications, like philosophical thought experiments but with more "meat" in the narrative. Since thought experiments are about probing intuitions, having more textured stories can give more nuanced views on some of these questions.

And that's why I love the mind upload trope.

Think I’ve missed something? Have a different view on mind uploads? Share in the comments!

Hello, fellow neuroscientist here! (End of my 3rd year in a PhD program. I do developmental research)

No notes, this was a solid breakdown!

It is extremely comical to me when media misunderstands the absurd intricacies of what they're describing especially when it comes to the brain. Johnny Depp's Transcendence movie is coming to mind.

I look forward to seeing more of your stuff and interacting with you in the future!

The other thing stories usually miss is the amount of data involved and the time it takes to transfer that data even at very high speeds. The brain contains at least petabytes of data regarding neurons, synapses, the interconnection map, the strength of the interconnections, and probably a lot more (glial cells, for instance, may be important to cogitation).

As an example, suppose we're talking 30 petabytes and a blazing speed of one terabyte per second. That's 30,000 seconds, which is over eight hours. And that's at one terabyte per second.
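The same estimate as a minimal Python sketch, for anyone who wants to try other sizes or speeds. It assumes decimal units (1 petabyte = 1,000 terabytes) and a constant transfer rate with no overhead. The function name is made up for illustration.

```python
# Transfer-time estimate for a brain-sized dataset.
# Assumptions: decimal units (1 PB = 1,000 TB) and a constant rate
# with no protocol overhead or interruptions.

TB_PER_PB = 1_000
SECONDS_PER_HOUR = 3_600


def transfer_time_seconds(data_petabytes: float, rate_tb_per_s: float) -> float:
    """Seconds needed to move the given amount of data at a fixed rate."""
    if rate_tb_per_s <= 0:
        raise ValueError("rate must be positive")
    return data_petabytes * TB_PER_PB / rate_tb_per_s


if __name__ == "__main__":
    seconds = transfer_time_seconds(30, 1)
    print(f"{seconds:,.0f} seconds, or about {seconds / SECONDS_PER_HOUR:.1f} hours")
```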