This is the first in a series of loosely related posts I plan to write about the processes that shape what experiences we're exposed to. I'm giving the series the dramatic title of Reality Filters, which makes it sound like it could be a cool space opera. Unfortunately, there are no spaceships or lasers here.

Why do some songs become chart toppers while others fall flat? Why do Stephen King’s books sell millions of copies while other talented authors are lucky to sell thousands? Why do some paintings/operas/symphonies/poems become enduring classics while others fade into obscurity?

Some reasons are straightforward: some books and songs suck, so they’re never going to be famous. Some have an enormous marketing team behind them, so they are sure to achieve some recognition. But is it luck or quality that determines how successful those at the top are?

Everything is Obvious by Duncan Watts is a book about how everything seems obvious in retrospect (classic hindsight bias), while in reality we’re terrible at predicting outcomes ahead of time. We’re good at creating post hoc explanations, but terrible at understanding true causality in complex systems.

One of the most interesting points in the book is the inherent unpredictability of social phenomena, such as what becomes popular. Serendipitous events made the Mona Lisa far more popular than other paintings of similar quality (a story told in more detail in Sassoon’s Becoming Mona Lisa). On the more scientific side, Watts points to an experiment exploring how popularity develops under different conditions.

The experiment involved 14,341 participants exposed to an artificial “music market” where they could download songs. The songs, taken from an assortment of bands on the early-2000s equivalent of Soundcloud, were ones participants were unlikely to know. Amusingly, to validate that participants were unlikely to have heard these songs before, the researchers shared them with two “experts” (a DJ at the college radio station and a music editor at a now-defunct website). The experts agreed they had never heard these songs, confirming these bands were nobodies.

Equipped with a collection of unknown songs, the experimenters set up a website for the participants to listen to and download the songs from. Participants could listen to a song, then were asked to rate the song and asked if they would like to download it.

The trick is, the experimenters set up a few independent “worlds” and looked at download counts in each of these worlds. In the “independent” world, the website didn’t give participants information about what other participants were downloading. They simply had a list of songs to play/download and were making their decisions with no social influence, and the researchers could look at what got downloaded.

In the “weak social influence” condition, the list of songs included the number of times other users had downloaded each song. This acted as a signal about which songs other users had liked. The experimenters set up eight different “worlds” in this condition, where the downloads in each world were independent of the others. This way, they could look across worlds and see which songs rose to the top in each, effectively rewinding and replaying history to see if the same songs rose to the top.

Finally, they set up the “strong social influence” condition, with another eight worlds, where participants had the download count and songs were sorted by the download count, giving more emphasis to the current popularity of the songs.

When they looked at the data, the researchers found that the social influence conditions had greater inequality (meaning the differences in popularity between the more popular and less popular songs were larger). Inequality was higher in the strong social influence condition than in the weak one. This makes sense: a song’s download count carries information about how good it is, so participants favor listening to songs with more downloads. As those songs are listened to more often, they are more likely to be downloaded, which reinforces the social signal. The stronger the social signal (here, strengthened by sorting the songs by download count), the more powerful this feedback effect.

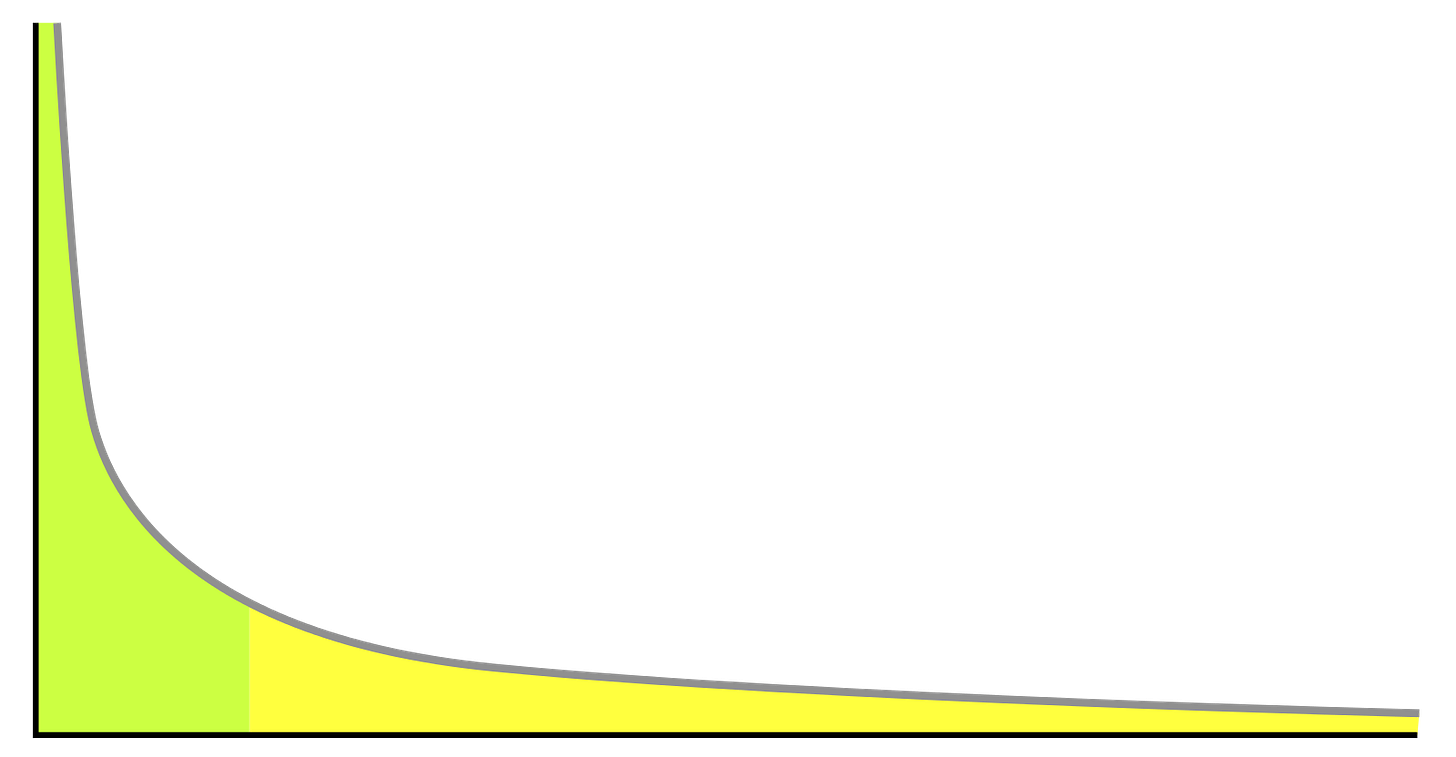
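
The feedback loop described above can be made concrete with a toy simulation. This is a rough sketch under my own assumptions, not the researchers' setup: each simulated participant downloads exactly one song, the `influence` exponent stands in for the strength of the social signal (0 means the independent condition), the song "qualities" are made-up random numbers, and inequality is measured with the Gini coefficient. The names `simulate_world` and `gini` are hypothetical helpers.

```python
import random


def simulate_world(qualities, n_participants, influence, seed):
    """Toy music market in which each participant downloads one song.

    A song is picked with probability proportional to
    quality * (1 + downloads) ** influence, so influence=0 ignores
    download counts entirely (the "independent" condition).
    This weighting rule is an assumption made for illustration.
    """
    rng = random.Random(seed)
    downloads = [0] * len(qualities)
    songs = range(len(qualities))
    for _ in range(n_participants):
        weights = [q * (1 + d) ** influence for q, d in zip(qualities, downloads)]
        pick = rng.choices(songs, weights=weights)[0]
        downloads[pick] += 1
    return downloads


def gini(counts):
    """Gini coefficient: 0 is perfect equality, values near 1 mean one song takes all."""
    xs = sorted(counts)
    n, total = len(xs), sum(xs)
    if total == 0:
        return 0.0
    ranked_sum = sum((i + 1) * x for i, x in enumerate(xs))
    return 2 * ranked_sum / (n * total) - (n + 1) / n


# 48 songs with arbitrary, made-up qualities (illustrative numbers only).
quality_rng = random.Random(0)
qualities = [quality_rng.uniform(0.5, 1.5) for _ in range(48)]

for label, influence in [("independent", 0.0), ("weak", 0.5), ("strong", 1.0)]:
    downloads = simulate_world(qualities, 2000, influence, seed=1)
    print(f"{label:12s} gini = {gini(downloads):.2f}")
```

Raising the exponent is only a stand-in for "stronger social signal"; the real experiment strengthened the signal by sorting the list, which this sketch does not model.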

The way social influence amplifies inequality in popularity is a useful model for thinking about media consumption in a globalized world. We don’t sample media randomly. People share media the world over, and we are more connected than ever through social media and recommendation algorithms. Artists who have seen success in the past have advertising budgets. Popularity across media of all types tends to follow a power law: if you plot items in order of popularity, you get a hockey-stick shape. Most things sit in a similar range of low popularity, and then there is a sudden spike for the lucky few that reached breakout success. There isn’t a lot of middle ground; popularity sits on a knife’s edge.

The other finding was that popularity was unpredictable. Different “worlds” in the social influence conditions allow us to see if the same songs always come out on top. They don’t. There is a relationship between the “quality” of the song (measured by how well it does in the independent condition) and its rank, but it’s far from deterministic. In the words of the researchers: “The best songs rarely did poorly, and the worst rarely did well, but any other result was possible”. This effect was stronger in the strong social influence condition.

This makes sense: If social influence is strong, the idiosyncratic tastes of the first few participants are going to have an outsized impact on the outcome. The songs they like will receive a boost and become more discoverable, while those they don’t like (or just happened to not listen to) are going to fall by the wayside. Each subsequent participant will be more likely to listen to the songs that have already seen some success, amplifying that signal.
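
The "rewind and replay" idea can also be sketched in code. Again, this is a toy model under assumptions of mine rather than the researchers' method: the choice rule, the `influence` exponent, and the made-up song qualities are all illustrative, and the `unpredictability` helper (the average gap in a song's market share between two worlds) is just one reasonable way to quantify how much outcomes differ from world to world.

```python
import random
from itertools import combinations


def run_world(qualities, n_participants, influence, rng):
    """Run one toy world and return each song's market share of downloads."""
    downloads = [0] * len(qualities)
    songs = range(len(qualities))
    for _ in range(n_participants):
        # Assumed choice rule: popular songs get extra weight when influence > 0.
        weights = [q * (1 + d) ** influence for q, d in zip(qualities, downloads)]
        pick = rng.choices(songs, weights=weights)[0]
        downloads[pick] += 1
    return [d / n_participants for d in downloads]


def replay(qualities, influence, n_worlds=8, n_participants=1000, seed=0):
    """Replay history: same songs and same rules, different random participants."""
    rng = random.Random(seed)
    return [run_world(qualities, n_participants, influence, rng) for _ in range(n_worlds)]


def unpredictability(worlds):
    """Mean absolute difference in a song's market share across pairs of worlds."""
    n_songs = len(worlds[0])
    pairs = list(combinations(worlds, 2))
    total = sum(abs(a[s] - b[s]) for a, b in pairs for s in range(n_songs))
    return total / (len(pairs) * n_songs)


# 48 songs with arbitrary, made-up qualities (illustrative numbers only).
quality_rng = random.Random(42)
qualities = [quality_rng.uniform(0.5, 1.5) for _ in range(48)]

for label, influence in [("independent", 0.0), ("strong", 1.0)]:
    worlds = replay(qualities, influence)
    winners = {max(range(len(w)), key=w.__getitem__) for w in worlds}
    print(label, "unpredictability:", round(unpredictability(worlds), 4),
          "distinct winners:", len(winners))
```

Counting the distinct winners across the eight worlds is a quick way to see whether the same song keeps coming out on top when history is replayed.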

No doubt things are messier in the real world. Songs don't exist in a vacuum. Artists make them, some of whom already have fanbases or connections. Artists live in different places, have different financial resources to draw on, and are going to have different happenstance encounters in the world. All of these will have different impacts at the artist and song level, and interact in a complex way such that even those artists or songs that seem to have everything "right" for a rise to fame are still subject to the serendipity that will make them stand out from a crowd of equally qualified options.

The songs that rose to the top in this experiment were high quality. In retrospect you could justify the popularity of any song by pointing to its quality. But why that high-quality song was more popular than another of equal (or perhaps greater) quality might be luck. We can come up with plausible post hoc explanations for why one rose to the top, but if we “rewound the tape” and played it again, something different would sit at the top—and we would have a different, perfectly reasonable explanation for why that was at the top. In other words, we can easily deceive ourselves into thinking we understand why something rose to the top, even though in reality chaotic happenstance played a large role.

These dynamics play into hindsight bias (seeing things as more predictable than they really are). The next post will look at how these and similar processes determine what we're exposed to and how they give rise to cognitive biases, with a connection to machine/statistical learning concepts.

So true, and the writing rings clear as a bell. I am reminded of Malcolm Gladwell's ideas on how statistical randomness, or basically, luck, will sometimes skew the odds in our favor (or out of it).

Interesting thoughts. I find the science and mathematics of heavy-tailed distributions to be illuminating across many domains of human experience. Perhaps the most obvious example is wealth distributions, which exhibit effects similar to what you discussed.