Smart Mode, Dumb Mode, and Tools for Thinking

The 2 System Model of thinking, and some other thoughts on irrationality

Please hit the ❤️ “Like” button if you enjoy this post, it helps others find this article.

A popular way of thinking about our irrationality is System 1 vs System 2 thinking. This is the primary framing in Thinking, Fast and Slow by Daniel Kahneman, possibly the most popular book on the science of decision-making ever written.

The narrative goes that System 1 is a fast, reflexive system, while System 2 is slow and more effortful. Think about this question:

A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much is the ball?

If you answered $0.10, this is supposedly because of your System 1: it's a reflexive answer, reached by subtracting $1.00 from the total cost.

But the real answer is $0.05, since if the ball costs $0.05, the bat costs $1.05, bringing the total to $1.10. This requires thinking more deeply through the problem, and therefore (the story goes) is System 2.

This example is often given as an intuitive demonstration of the 2 System Theory. Our System 1, full of heuristics, gets us the quick but incorrect answer, while thinking more deeply about it would be an effortful System 2 job.

But note that this is a trick question: it has an easy but wrong way of going about it and a correct but more difficult way. That feature of the question forces a dichotomy: you either do it the right way or make the common mistake.

Most questions we face in real life aren't like this, and our decision-making processes are similarly not so easily categorized.

As one paper put it: "there is no strict dichotomy between heuristic and nonheuristic, as strategies can ignore more or less information."

We have different strategies for decision-making and some are quicker and less effortful than others, but there aren't just two modes of thought. There's a plethora of cognitive tools we use when making decisions.

There's also the question of when we decide to use different strategies. There's evidence that we use our confidence in our initial answer to determine whether we stick with a fast heuristic or revisit the problem with a different strategy. One model of how we make decisions is that we use confidence as a guide for when to consider more information, integrating more of it into the decision until we reach a threshold of confidence.

When we go into a bookstore looking for a book, we use a bunch of heuristics to pare down the possible options (maybe only considering a certain genre). Depending on how much time we have, we might only consider books or authors we've heard of and read the back covers, or pick a few with interesting titles to look at more closely. Constraints like our time dictate which strategies we use, and our confidence that we've found a book we'll enjoy might be the deciding factor in when we stop looking.
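To make the confidence-threshold idea concrete, here is a minimal Python sketch. It assumes evidence arrives one item at a time as a signed number (positive favors option "A", negative favors "B") and that confidence is a logistic function of the running total. The function name `decide`, the `threshold` and `budget` parameters, and the confidence formula are all hypothetical illustrations, not the specific model mentioned above.

```python
import math
from typing import Iterable, NamedTuple


class Decision(NamedTuple):
    choice: str        # "A" or "B"
    confidence: float  # between 0.5 and 1.0
    samples_used: int  # how much evidence was actually consulted


def decide(evidence: Iterable[float], threshold: float = 0.8, budget: int = 20) -> Decision:
    """Accumulate evidence until confidence reaches `threshold` or the `budget` runs out.

    Hypothetical model: confidence is a logistic function of the absolute
    running total, so it starts at 0.5 and grows as evidence piles up on one side.
    """
    total = 0.0
    used = 0
    confidence = 0.5
    for item in evidence:
        used += 1
        total += item
        confidence = 1 / (1 + math.exp(-abs(total)))
        # Stop early when we feel sure enough, or when time (the budget) is up.
        if confidence >= threshold or used >= budget:
            break
    choice = "A" if total >= 0 else "B"
    return Decision(choice, confidence, used)


# Consistent evidence: stops after 3 of the 4 available items.
print(decide([0.5, 0.4, 0.6, 0.3]))
# Weak evidence with a tight time budget: stops at the budget, still unsure.
print(decide([0.1] * 50, budget=5))
```

The two knobs interact: a lower threshold or a smaller budget means less information gets integrated before a choice is made, which is the trade-off the bookstore example describes.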

In the real world, constraints are all over the place.

Bounded Rationality

Of course, sometimes our decision-making strategies result in mistakes. Sometimes systematically so—resulting in a bias.

But the right way to think about biases isn't to throw up one's hands and say "lol we're dumb" (and then secretly think "lol other people are so dumb, but not me, I do System 2 thinking when appropriate").

There's a rich research literature on bounded rationality: a framework that takes into account the constraints on our cognitive systems. We're rational within some constraints. We have limits on the time we have to solve problems and the amount and types of information our cognitive systems can easily process. We therefore often try to find a satisfactory solution rather than an abstractly optimal one, like in the bookstore example: you don't search through every book because you're constrained by how much time you have (and your own boredom).

Deploying heuristics to speed things up is the rational thing to do. We have different information we can use and different strategies to assess that information, and depending on how quickly we need to decide or how confident we are based on the information we've seen so far, we may include more or less information.
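The difference between settling for a satisfactory option (often called satisficing) and searching for the optimal one can be sketched in a few lines of Python. This is a toy illustration, assuming each option (say, a book) can be given a numeric score and that there is an "aspiration level" that counts as good enough; the function names, titles, and scores are hypothetical.

```python
from typing import Callable, Optional, Sequence, Tuple


def satisfice(
    options: Sequence[str],
    score: Callable[[str], float],
    aspiration: float,
    max_looks: int,
) -> Tuple[Optional[str], int]:
    """Return the first option that is good enough, plus how many options were examined.

    Gives up (returns None) if nothing good enough turns up within `max_looks`.
    """
    looked = 0
    for option in options[:max_looks]:
        looked += 1
        if score(option) >= aspiration:
            return option, looked
    return None, looked


def optimize(options: Sequence[str], score: Callable[[str], float]) -> Tuple[str, int]:
    """Examine every option and return the best one, plus how many were examined."""
    return max(options, key=score), len(options)


# Hypothetical shelf of books with made-up enjoyment scores (0 to 10).
shelf = ["Book A", "Book B", "Book C", "Book D"]
enjoyment = {"Book A": 4, "Book B": 7, "Book C": 9, "Book D": 6}

print(satisfice(shelf, lambda b: enjoyment[b], aspiration=7, max_looks=10))  # ('Book B', 2)
print(optimize(shelf, lambda b: enjoyment[b]))                               # ('Book C', 4)
```

The satisficer stops after two books with a good-enough pick; the optimizer finds a better book but has to look at the whole shelf. Which strategy is the rational one depends on how costly each extra look is.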

Bounds on our time and capacity to consume information aren't our only constraints. We're also constrained by the biology of our cognitive system. For example, peak-end bias (our tendency to place more weight on the most pleasant/unpleasant part of an experience and on how pleasant/unpleasant the end of an experience was) is likely in part due to the limitations of our memory.
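Here is a toy Python illustration of the peak-end pattern. It assumes an experience is recorded as a list of moment-by-moment discomfort ratings (higher is worse) and that remembered discomfort is approximated by averaging the worst moment and the final moment. The ratings are hypothetical, and this describes the bias rather than modeling memory, but notice how little it needs to store: two numbers instead of the whole sequence.

```python
from typing import Sequence


def peak_end(ratings: Sequence[float]) -> float:
    """Remembered discomfort under the peak-end rule: average of the worst and the last moment."""
    if not ratings:
        raise ValueError("need at least one rating")
    return (max(ratings) + ratings[-1]) / 2


# Hypothetical discomfort ratings (0 = none, 10 = worst), one per minute.
short_version = [2, 5, 8]
long_version = [2, 5, 8, 4]  # the same experience plus a milder extra minute

for name, ratings in [("short", short_version), ("long", long_version)]:
    print(name, "total:", sum(ratings), "peak-end:", peak_end(ratings))
# short total: 15 peak-end: 8.0
# long total: 19 peak-end: 6.0
```

The long version contains strictly more total discomfort, yet its peak-end score is lower, which is the pattern the bias describes.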

It has also been argued that our tendency to frame options as gains or losses relative to a reference point (which leads to framing effects and other often-cited examples of human irrationality) is partly due to constraints on the precision with which our brains can represent values.

Contextualized Reasoning

It's not hard to find other problems that we're surprisingly bad at. Take the Wason Selection task:

You are shown a set of four cards placed on a table, each of which has a number on one side and a color on the other. The visible faces of the cards show 3, 8, blue and red. Which card(s) must you turn over in order to test that if a card shows an even number on one face, then its opposite face is blue?

Which cards would you flip over? Try before reading on.

Most people get this wrong—about 90% in the original study.

The right answer is that you need to check the 8 and the red card. The 8 needs to have blue on the other side, and the red card cannot have an even number on the other side. The blue card can have an even or odd number, and the 3 can have whatever color it wants on the back; neither has a way to "break the rule".

But most people have trouble seeing these implications of the logical rule "if a card shows an even number on one face, then its opposite face is blue". If you got it right, good for you (though I suspect it's because you were expecting a trick question, and/or you're a weirdo with experience in abstract logical rules).

Now try the following:

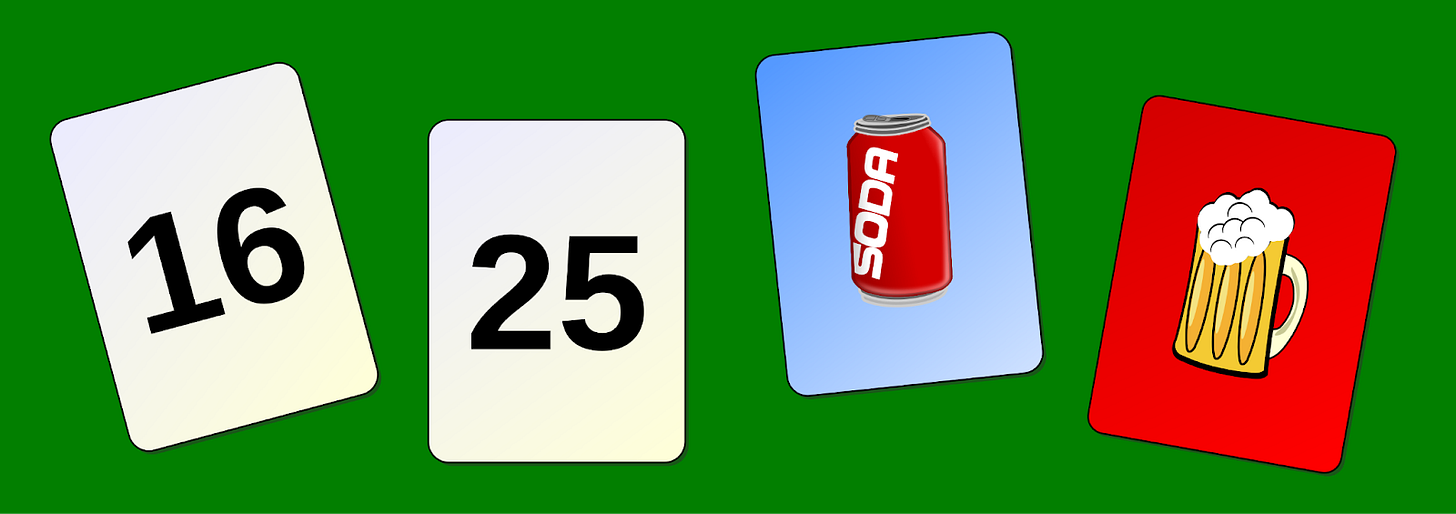

Each card has an age on one side and a drink on the other. Which card(s) must be turned over to test the idea that if you are drinking alcohol, then you must be over 18?

Regardless of whether you got the original task right, you probably found this one easier. It's obvious: you need to check what the 16-year-old is drinking and the age of the person drinking the beer.

The logical rule applied here is exactly the same, but somehow we find it much easier to apply it in this context. People perform much better on this version of the task. This is one example of a general trend: people perform much better on ecologically valid tasks (those more like what we would face in the real world) than abstract ones.
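One way to see that the two tasks share exactly the same logic is to write a single checker and run it on both. The Python sketch below assumes each card is a pair (number or age, color or drink) and flips a card exactly when some possible hidden side would break the rule. The faces 25 and "soda" in the drinking version are hypothetical stand-ins, since the text only names the 16-year-old and the beer; the helper names are illustrative too.

```python
from typing import Callable, Iterable, List, Tuple, Union

Face = Union[int, str]
Card = Tuple[int, str]  # (number-or-age side, color-or-drink side)


def completions(face: Face, numbers: List[int], words: List[str]) -> List[Card]:
    """All full cards consistent with one visible face."""
    if isinstance(face, int):
        return [(face, w) for w in words]
    return [(n, face) for n in numbers]


def cards_to_flip(
    visible: Iterable[Face],
    numbers: Iterable[int],
    words: Iterable[str],
    violates: Callable[[Card], bool],
) -> List[Face]:
    """Return the visible faces whose hidden side could reveal a rule violation."""
    numbers, words = list(numbers), list(words)
    return [
        face for face in visible
        if any(violates(card) for card in completions(face, numbers, words))
    ]


# Abstract version: "if a card shows an even number, its other side is blue".
abstract = cards_to_flip(
    visible=[3, 8, "blue", "red"],
    numbers=range(10),
    words=["blue", "red"],
    violates=lambda card: card[0] % 2 == 0 and card[1] != "blue",
)

# Drinking version: "if you are drinking alcohol, you must be over 18".
drinking = cards_to_flip(
    visible=[16, 25, "beer", "soda"],
    numbers=range(100),
    words=["beer", "soda"],
    violates=lambda card: card[1] == "beer" and not card[0] > 18,
)

print(abstract)  # [8, 'red']
print(drinking)  # [16, 'beer']
```

The only thing that changes between the two calls is the content plugged into `violates`; the procedure for choosing cards is identical.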

People often struggle with abstract probabilities, but perform better when those probabilities are translated into frequencies. "5 out of 20" is easier for most people to reason about than "25%".
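To make the frequency framing concrete, here is a small Python sketch of the kind of Bayesian question people find hard, computed twice: once with probabilities and once with counts out of 1,000 people. The numbers (a 1% base rate, a test that catches 90% of cases, and a 10% false-positive rate) are hypothetical and chosen to produce whole-number counts. `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def posterior_from_probabilities(base_rate, hit_rate, false_alarm_rate):
    """Bayes' rule in probability form: P(condition | positive test)."""
    p_positive = base_rate * hit_rate + (1 - base_rate) * false_alarm_rate
    return base_rate * hit_rate / p_positive


def posterior_from_frequencies(population, base_rate, hit_rate, false_alarm_rate):
    """The same question, phrased as counts out of a concrete population."""
    with_condition = population * base_rate                  # 10 out of 1,000
    true_positives = with_condition * hit_rate               # 9 of those 10
    without_condition = population - with_condition          # 990
    false_positives = without_condition * false_alarm_rate   # 99 of those 990
    return true_positives / (true_positives + false_positives)


base, hit, false_alarm = Fraction(1, 100), Fraction(9, 10), Fraction(1, 10)
print(posterior_from_probabilities(base, hit, false_alarm))      # 1/12
print(posterior_from_frequencies(1000, base, hit, false_alarm))  # 1/12
```

Both functions give exactly the same answer. The frequency version just makes it easy to see why: of the 108 people who test positive, only 9 actually have the condition.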

When problems are framed similarly to how we might actually encounter them in real life, people do better. Which raises a (rhetorical) question:

What counts as human rationality: reasoning processes that embody content-independent formal theories, such as propositional logic, or reasoning processes that are well designed for solving important adaptive problems?

Not being able to solve an abstract logic problem (or a trick math word problem) isn't a demonstration of human irrationality. It might just be a sign of the irrelevance of the problems.

Logic and probability are useful tools for reasoning in certain contexts. But I think that's the way to think about them—as tools, not as the definitions of rationality.

Smart Mode and Dumb Mode versus Thinking Tools

I think people who read about the 2 System Theory of reasoning end up better informed than those who don't. We do use heuristics, and people are bad at certain kinds of reasoning. Don't worry, I'm human; I've noticed we do dumb stuff (not me though, of course).

But I want to suggest a different way of thinking about this. We don't just have a "smart mode" (System 2) and "dumb mode" (System 1). Instead, we have a collection of tools for thinking and deciding. Some are surprisingly complex tools we use with ease—like sophisticated models of other people to infer emotional states and routinely navigate incredibly complex social environments.

There are problems we encounter less frequently, like interpreting probabilities or statistics, which many people only run into when reading the news. Most people haven't invested the time and energy to acquire the specialized tools for those tasks and learn how to use them. As with many at-home projects, they just grab a screwdriver and duct tape and, eh, it's not a professional job, but the job is unimportant enough that it's fine (and maybe, like many homeowners, we're even wildly overconfident in the results because we don't know what a professional job would look like).

Some of us have lots of experience and training with abstract logic and probabilities. Heck, I have 8 years of experience as a data scientist, and one of the interview questions we use to weed out candidates at the first stage is a Bayesian probability problem that people are famously bad at. People can learn to be good at this stuff, but it's like buying a welding torch and learning to use it: probably only worth it if you plan to use that tool professionally. It doesn't make much sense for the typical homeowner.

Deciding when to apply a tool is a whole other task. You gain that knowledge by facing similar situations.

A student who has recently been doing a lot of algebra word problems is more likely to recognize the bat and ball problem above as a math problem. Writing a for the price of the bat and b for the price of the ball, it translates into:

a + b = 1.10

a - b = 1

They can apply the tools of algebra and readily get the correct answer.
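For completeness, here is the algebra tool applied mechanically, as a small Python sketch. It assumes a is the bat's price and b is the ball's, which is the reading consistent with a - b = 1: adding the two equations gives 2a = 2.10, and subtracting them gives 2b = 0.10. The function name is hypothetical, and `Decimal` is used so that cents come out exactly rather than as floating-point approximations.

```python
from decimal import Decimal
from typing import Tuple


def solve_sum_and_difference(total: Decimal, difference: Decimal) -> Tuple[Decimal, Decimal]:
    """Solve a + b = total and a - b = difference by elimination.

    Adding the equations gives 2a = total + difference;
    subtracting them gives 2b = total - difference.
    """
    a = (total + difference) / 2
    b = (total - difference) / 2
    return a, b


bat, ball = solve_sum_and_difference(Decimal("1.10"), Decimal("1.00"))
print(bat, ball)  # 1.05 0.05

# The intuitive answer fails the check: a $0.10 ball means a $1.10 bat.
wrong_ball = Decimal("0.10")
print(wrong_ball + (wrong_ball + Decimal("1.00")))  # 1.20
```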

For those of us who have been out of algebra class for a long time, that tool is likely sitting at the back of the shelf collecting dust. We don't recognize this as a context that calls for it, because it's a really weird context: no one gives prices this way. It's no surprise that people who haven't recently been exposed to algebra word problems don't recognize this as a place to apply algebra.

I suspect people with experience in abstract math, probability, and logic problems are more likely to end up reading about psychological biases (hint: it's because we're nerds). It's easy to think we're above the folks unable to solve abstract problems. But I think what's really going on is we just have more experience with the abstract context these problems are presented in and have been using those tools more recently. It's not that everyone else is being dumb, it's that it's mostly irrelevant to them.

But there's an issue with having a specialized tool: when you have a (fancy, specialized) hammer, everything looks like a (fancy, specialized) nail. Formal logic is a great tool, but getting too stuck thinking in terms of propositional logic can make your thinking rigid. Analytic philosophers have a lot of training in formal reasoning, but one of the issues I run into when reading philosophy is how set they are on rigid, clean definitions. There are, of course, wonderful exceptions to this. But I sometimes wonder whether training with other reasoning tools, like statistics, would help them think in terms of the fuzzy distributions that dominate so much of our world.

When we are given a problem to solve, we compare it to similar problems we've faced before and reach for the tool we used for that problem. If we happen to have a lot of experience with abstract logic and probabilities, maybe it'll be the right one. If not, it's not surprising we do something dumb.

If you’re a Substack writer and have been enjoying Cognitive Wonderland, consider adding it to your recommendations. I really appreciate the support.