Vision As Scientific Inference

So you think you just look at stuff? Vision is actually a complex construction by the scientist in your skull

Each of us owns highly sophisticated instruments for detecting electromagnetic radiation with wavelengths from about 380 to 700 nanometers. We keep them in our skulls and call them eyeballs. We like to call the specific range of the electromagnetic spectrum they happen to detect "visible light".

There's nothing inherently "visible" about these wavelengths—it's just what our sensors happen to be able to pick up.

If we were different creatures, like the mantis shrimp, whose eyes can detect wavelengths from about 300 nm to 720 nm, we would have wider bounds for what we consider the visible light spectrum. But don't feel too jealous of the mantis shrimp's extra range—we humans might not come with built-in sensors for that range, but we can build sensors that go far beyond it.

We use these built-in electromagnetic sensors quite a bit. Your extraocular muscles point your eyeball in different directions to capture radiation bouncing off different objects. The lens in your eyeball focuses the radiation onto your retina. The cells in your retina turn that radiation into an electrical signal sent on to the rest of your brain.

That might sound like an explanation for how vision works—light hits your eyes, gets converted into electrical signals, and those signals go to the brain. But just having raw measurements from our electromagnetic radiation sensors doesn't tell us what's out there. That needs to be inferred.

Contrast

Imagine you were stuck in your skull and had to figure out what's going on out in the world (in a sense, that's exactly the situation you are in; I'm just drawing your attention to it). You need to use the measurements taken by the various sensors your body comes equipped with to determine what's out there.

When light hits the cells in our retinas, the cells respond, but there are over a hundred million of them and all their signaling is essentially saying "there's light over here!". Imagine a crowd of ~120 million people who just shout louder when more light shines on them, and trying to figure out from that cacophony what image a giant projector is projecting onto the crowd. I suspect you might find it a bit difficult.

If you want to make any sense out of the measurements your sensors are taking, you need to do some clever processing of those signals. And that's what our brain does.

The interesting stuff happens where there's a sharp change in light or color. Finding areas of high contrast is how we tell where one object ends and another begins.

We have neurons (retinal ganglion cells) that combine the light signals specifically to detect contrast. They take a group of the "Light here!" cells and fire most strongly when there's a difference between the middle of the group and the outer part of the group, indicating contrast (I'm simplifying quite a bit here).
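A toy one-dimensional sketch in Python makes the center-surround idea concrete. It assumes a hypothetical cell whose center is a single photoreceptor and whose surround is the two receptors on each side; the cell's output is the center's light minus the surround's average. Real ganglion cells have two-dimensional, graded receptive fields, so treat this as an illustration rather than a model.

```python
def center_surround(light, surround=2):
    """Toy ON-center cell responses along a row of photoreceptors.

    light: list of photoreceptor intensities.
    surround: hypothetical number of neighbours on each side forming the surround.
    Each response is the center value minus the mean of its surround.
    """
    responses = []
    for i in range(surround, len(light) - surround):
        ring = light[i - surround:i] + light[i + 1:i + 1 + surround]
        responses.append(light[i] - sum(ring) / len(ring))
    return responses


# A dim region followed by a bright region: a single step edge.
row = [1, 1, 1, 1, 1, 9, 9, 9, 9, 9]
print(center_surround(row))  # [0.0, -2.0, -4.0, 4.0, 2.0, 0.0]
```

Across the uniform stretches the cells stay silent (0.0); they only "speak up" on either side of the boundary, which is exactly where the interesting information is.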

Now, instead of over a hundred million neurons just shouting "Light here!", we have a couple of million neurons shouting "Contrast here!". An improvement, but it's still going to be hard to sort out what image is being projected from all that noise. We're still far from being able to infer what's out there in the world.

From contrast to edge (and beyond)

Once those contrast signals leave the retina, your brain still needs to make sense of them. So the brain goes hunting for more patterns: lines, edges, and structure.

The brain again groups the signals together to look for a more informative pattern. A single spot of contrast doesn't say much on its own; usually it's "edges", continuous lines of contrast, that show where an object starts and ends. So in the early visual cortex, edge-detector neurons take the contrast signals and combine them along a line, responding to contrast at a specific spot and in a specific orientation.

With edge detectors covering every orientation and every spot in your visual field, we now have a bunch of signals indicating an edge of a specific orientation in a specific area.
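Here is a similarly hedged sketch of an orientation-tuned edge detector. It assumes a tiny grid of brightness values and a hypothetical `edge_response` function that pools three local brightness differences (standing in for contrast signals) lined up in a column or a row. Taking `abs()` ignores which side of the edge is brighter, another simplification.

```python
def edge_response(img, r, c, orientation):
    """Toy orientation-tuned 'edge detector' centered near pixel (r, c).

    img is a hypothetical 2D grid (list of rows) of brightness values.
    The unit pools three brightness differences lined up along its
    preferred orientation; abs() ignores which side is brighter.
    """
    if orientation == "vertical":
        # left-vs-right differences, sampled at three points stacked vertically
        return sum(abs(img[r + k][c + 1] - img[r + k][c]) for k in (-1, 0, 1))
    if orientation == "horizontal":
        # top-vs-bottom differences, sampled at three points side by side
        return sum(abs(img[r + 1][c + k] - img[r][c + k]) for k in (-1, 0, 1))
    raise ValueError(f"unsupported orientation: {orientation}")


# Dark left half, bright right half: a vertical edge between columns 1 and 2.
img = [[0, 0, 9, 9, 9] for _ in range(5)]
responses = {o: edge_response(img, 2, 1, o) for o in ("vertical", "horizontal")}
print(responses)  # {'vertical': 27, 'horizontal': 0}
```

Only the detector whose orientation matches the edge fires. A full bank would repeat this for many orientations at every location in the visual field.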

This is progress, but still a noisy mess of edge signals with no clear meaning yet. So we add another layer, taking the edge signals and grouping some together to detect corners, curves, and other basic shapes. At each level, the brain is making educated guesses about structure—building a model of the world out of raw sensory differences. From those building blocks of edges, curves, and corners, it becomes easier to put the parts together to recognize everyday objects.
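One simple way to picture that next layer is a hypothetical "corner unit" that fires only where a vertical-edge signal and a horizontal-edge signal are both strong. The grids below are made-up edge-detector outputs, and the `min()` pooling is an assumed AND-like rule; the brain's actual mid-level shape coding is far richer. The intuition is loosely shared by computer-vision corner detectors such as Harris, which look for intensity changes in two directions at once.

```python
def find_corners(vertical_edges, horizontal_edges, threshold=1):
    """Return (row, col) positions where both edge signals are strong.

    Inputs are hypothetical 2D grids of edge-detector responses.
    min() makes the corner unit respond only as strongly as its weaker
    input, so a lone edge of either orientation is not enough.
    """
    corners = []
    for r, (v_row, h_row) in enumerate(zip(vertical_edges, horizontal_edges)):
        for c, (v, h) in enumerate(zip(v_row, h_row)):
            if min(v, h) >= threshold:
                corners.append((r, c))
    return corners


# A vertical edge coming down column 1 meets a horizontal edge along row 1.
vertical = [[0, 1, 0],
            [0, 1, 0],
            [0, 0, 0]]
horizontal = [[0, 0, 0],
              [0, 1, 1],
              [0, 0, 0]]
print(find_corners(vertical, horizontal))  # [(1, 1)]
```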

Contrast (haha) this with taking a picture with a camera. A camera doesn't need to identify specific shapes or objects. It's much more like that first layer of retina cells—it just conveys the light that was shining on each physical location of the camera's sensor (though "autofocus" features do look for contrast as a sign that the image is in focus). But that's the easy part—figuring out how to interpret what's in the picture is the complex bit. We invented cameras long before we invented software that could tell us what was in a picture. An XKCD comic from 2014 captured just how hard image classification still was at the time.
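The autofocus trick mentioned above can be sketched in a few lines. The idea behind contrast-detection autofocus is to try several lens positions and keep the one whose image has the most local contrast. Everything here is an assumption for illustration: a one-dimensional "image", a moving-average blur standing in for defocus, hypothetical lens positions, and a sum-of-squared-differences sharpness score. Real cameras use more elaborate measures, and many use phase detection instead.

```python
def sharpness(row):
    """Contrast score: sum of squared differences between neighbouring pixels."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))


def blur(row):
    """Simulate defocus with a 3-pixel moving average (edge pixels repeated)."""
    padded = [row[0]] + row + [row[-1]]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3 for i in range(len(row))]


def autofocus(candidates):
    """Pick the lens position whose image has the highest contrast score."""
    return max(candidates, key=lambda pos: sharpness(candidates[pos]))


scene = [0, 0, 0, 10, 10, 10]
candidates = {"far": blur(blur(scene)), "middle": scene, "near": blur(scene)}
print(autofocus(candidates))  # middle
```

Even this "smart" part of the camera only measures contrast; it never asks what the scene contains.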

Vision as Inference

There's a lot to vision I haven't mentioned—color, motion detection, parallax, top-down influences, and a million other important things. This isn't meant to be a textbook on the visual system.

The point I'm trying to get across is that what we see results from a complex inference. We are inferring, based on one thing (measurements from our electromagnetic radiation sensors), what is out there in the world. This inference requires a lot of processing; it doesn't come for free just because you have the information.

The exact processing the early visual system performs is based on expectations—that contrast in color or brightness is where the interesting stuff is, for example. Those expectations reflect regularities of our world: different objects tend to differ in color and brightness. Evolution had to discover this regularity, and it is now hardwired into our retinas. Interestingly, artificial neural networks trained to classify images end up hitting on the same solution—their early "layers" also detect edges in much the way the brain's early visual system does, before producing their inferences about what is in the picture.

Our inferences aren't just based on the raw signals and preconfigured processing. Our brains overlay additional assumptions—whether hardwired or learned—based on our understanding of the world. Our visual signals are often ambiguous. Maybe we caught a blur of motion out of the corner of our eye, we're looking through a fogged-up windshield, or we're trying to avoid stepping on toys while juggling a flailing child who keeps hitting us right in the electromagnetic radiation detectors.

Or there might just be inherent ambiguity in the data.

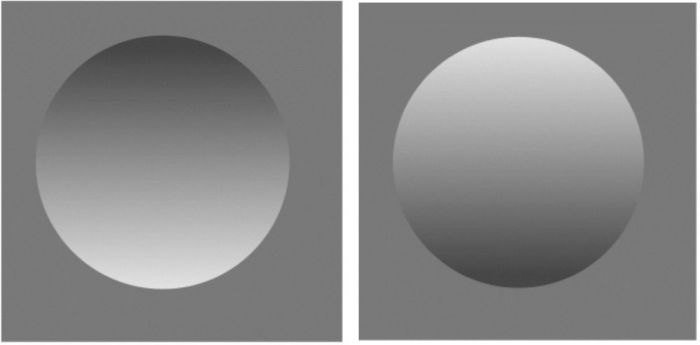

Take these pictures as an example:

These images are identical, except one is the flipped version of the other. If you're like most people, you see the left one as concave—it's an indentation, receding into the page. The one on the right looks convex—it's a bump coming out of the page.

There's no fact of the matter about what shape these images show—they're digitally created. But we infer different physical things about them. Why? Because most of the time, light comes from above. If light is shining from above, the bottom of a concave shape will be lit while the top is in shadow, and vice versa for a convex shape. Our visual system seamlessly takes this regularity into account and combines the expected light direction with the visual information to infer the three-dimensional shape of these circles.
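The light-from-above inference can be written as a small Bayesian calculation. The shading alone is ambiguous: a bump lit from above and a dent lit from below produce the same bright-top, dark-bottom pattern. This sketch assumes a 50/50 prior over shape and a hypothetical prior of 0.8 that light comes from above; that number is illustrative, not a measured value.

```python
def predicted_bright_half(shape, light):
    """Which half of the disc each (shape, light direction) combination lights up."""
    if shape == "convex":
        return light  # a bump is brightest on the side facing the light
    return "bottom" if light == "top" else "top"  # a dent is lit on its far wall


def infer_shape(observed_bright_half, p_light_from_above=0.8):
    """Posterior over shape given which half of the disc looks bright.

    Each shape can explain the image with *some* light direction, so the
    assumed prior on light direction is what breaks the tie.
    """
    light_prior = {"top": p_light_from_above, "bottom": 1 - p_light_from_above}
    shape_prior = {"convex": 0.5, "concave": 0.5}  # assumed: no shape preference
    posterior = {shape: 0.0 for shape in shape_prior}
    for shape, p_shape in shape_prior.items():
        for light, p_light in light_prior.items():
            # likelihood is 1 if this combination predicts the observation, else 0
            if predicted_bright_half(shape, light) == observed_bright_half:
                posterior[shape] += p_shape * p_light
    total = sum(posterior.values())
    return {shape: p / total for shape, p in posterior.items()}


for bright in ("bottom", "top"):
    post = infer_shape(bright)
    print(bright, {s: round(p, 2) for s, p in post.items()})
# bottom {'convex': 0.2, 'concave': 0.8}
# top {'convex': 0.8, 'concave': 0.2}
```

Because the likelihoods tie, the verdict comes entirely from the prior on light direction, mirroring the flipped images: a bright bottom reads as concave, a bright top as convex.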

The point is, our brains don't passively receive what's out there in the world—we infer it. Our brain processes the visual signal in ways that assume a specific underlying structure, and we overlay additional higher-level expectations about the world (e.g. light is usually from above), to determine what we think is going on out there.

Scientific Inference

In a very real sense, we are stuck in our skulls, figuring out what's going on in the world from the measurements of various instruments. In that sense, what we're doing isn't unlike what scientists do to figure out other parts of our world.

Our everyday inferences about what's out there in the world happen quickly—when we enter a room, we instantly start making inferences about all of the objects in it. Scientific inferences are slower, but involve the same general process: take a measurement with an instrument, process that data to pull out the important patterns, and combine the result with our theories/expectations to infer what is out there.

We use detectors in particle accelerators that register specific forms of energy. Scientists combine measurements taken from the instruments with the expectations from theory to infer what's out there. When detecting exoplanets, we combine data from electromagnetic radiation sensors (not the eyeball kind) with theory to conclude, from a small, regular dip in a distant star's brightness, that a planet is "transiting" across it.

This is very different from how we think of our visual experience. We walk into a room and it feels as if a camera is simply swinging around and sending the exact captured image on to "us" (presumably some little person sitting somewhere in the brain—the homunculus fallacy). It feels like we have a direct connection to what's out there. But the way we sense the world is far weirder and more wonderful than that. Our visual world is a sophisticated construction—not because it's arbitrary and subjective, but because extracting information about the external world is a complex process we don't have direct visibility into (pun intended).

I find this juxtaposition between the simplicity of what we experience and the complexity of what's really going on pretty neato in the "makes me step back in breathless wonder at the universe and human experience" kind of way. Our experience is simple, the reality of what's going on is complex, but it's also understandable, which might be the coolest part of all. We can pull back the curtain on this thing that's such a constant, embedded, and intimate part of how we experience the world and break it down into comprehensible chunks. Hopefully doing so allows you to see things—including seeing itself—with firstness.

