Can Mark Zuckerberg Read Your Thoughts?

Your mind might not be as private as you thought. Or maybe it is. IDK, I'm just a subtitle.

Mind reading is a common trope in science fiction: in various fictional universes, you can hook someone up to some kind of brain scanner that reads the activity in their brain and tells you what they're thinking.

A related trope is the idea that some form of brain-machine interface could read the activity in our brains and allow us to control things just by thinking. Imagine being able to type with your mind! Well, this month, Meta released a paper purporting to do just that: "Brain-to-Text Decoding: A Non-invasive Approach via Typing". Whoa! We're in the future!

Should we expect to soon be interacting with Facebook (and the Metaverse, since I think they finally figured out how to program legs) using just our minds instead of our clumsy meat appendages? And will Mark Zuckerberg, or one of our other tech oligarchs, soon be able to read our innermost thoughts, finally vanquishing that last vestigial feeling of privacy we might have?

I'm going to throw some cold water on those ideas. But also throw some hot water on them! And maybe some lukewarm water, for good measure. There will just be a lot of water thrown on and around this whole mind reading topic. Grab a towel and don't panic.

Reading keystrokes from brains

First things first: What did Meta do?

They had a bunch of people type sentences on a keyboard while their brain activity was recorded. Then the researchers trained a machine learning model, showing it examples of the brain activity patterns that went with different key presses so the model could learn the relationship between the two. The model could then attempt to decode new examples, giving its best guess at which key the person was pressing based on their neural activity.
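
To make the train-then-decode idea concrete, here is a toy sketch in Python. It is not Meta's method (their decoder is a deep network trained on real MEG recordings). Instead, it simulates a hypothetical "brain pattern" for each key, learns an average pattern per key from labeled examples, and then guesses whichever key's average is closest to a new recording. The feature count, noise level, and simulated data are all invented for illustration.

```python
import math
import random

# Hypothetical setup: every key press comes with a feature vector summarizing
# the recorded brain activity. Real pipelines work on raw MEG time series.
KEYS = list("abcdefghijklmnopqrstuvwxyz ")
N_FEATURES = 30
NOISE = 1.5

rng = random.Random(0)
# Each key gets a fixed underlying pattern; recordings are noisy copies of it.
patterns = {k: [rng.gauss(0, 1) for _ in range(N_FEATURES)] for k in KEYS}


def record(key):
    """Simulate one noisy recording of the brain pattern for `key`."""
    return [p + rng.gauss(0, NOISE) for p in patterns[key]]


def train(examples):
    """Learn one average pattern (centroid) per key from (key, recording) pairs."""
    sums, counts = {}, {}
    for key, x in examples:
        acc = sums.setdefault(key, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[key] = counts.get(key, 0) + 1
    return {k: [v / counts[k] for v in s] for k, s in sums.items()}


def decode(centroids, x):
    """Guess the key whose learned centroid is closest to a new recording."""
    return min(centroids, key=lambda k: math.dist(centroids[k], x))


training_data = [(k, record(k)) for k in KEYS for _ in range(20)]
centroids = train(training_data)

test_keys = [rng.choice(KEYS) for _ in range(500)]
correct = sum(decode(centroids, record(k)) == k for k in test_keys)
accuracy = correct / len(test_keys)
print(f"accuracy: {accuracy:.2f} (chance is {1 / len(KEYS):.2f})")
```

The nearest-centroid rule is about the simplest classifier that still captures the idea: learn what each key's activity "usually looks like," then match new activity against those templates.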

They could do this! Sort of. The researchers say they achieved an accuracy of 80% "for the best participants". So they got about 1 out of every 5 characters wrong. That isn't bad: it's actually right around how accurate people are on a mobile keyboard, and standard autocorrect algorithms can fix most of those errors and recover much of the intended text. So if performance were this good across the board, we might be able to type with our minds!
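
A common way to grade decoded text is the character error rate: the edit distance between what was typed and what was decoded, divided by the length of the typed text. The sketch below treats "accuracy" as roughly one minus that rate, which is an assumption on my part. The `autocorrect` helper is a hypothetical, bare-bones stand-in for real autocorrect, which relies on much richer language models.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest insertions, deletions, or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the characters match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(typed: str, decoded: str) -> float:
    """Edit distance normalized by the length of the text that was actually typed."""
    return edit_distance(typed, decoded) / len(typed)


def autocorrect(word: str, dictionary: list[str]) -> str:
    """Hypothetical toy autocorrect: snap a decoded word to the closest dictionary word."""
    return min(dictionary, key=lambda candidate: edit_distance(word, candidate))


DICTIONARY = ["brain", "bran", "break", "drain"]
decoded = "brein"  # one wrong character out of five
print(character_error_rate("brain", decoded))  # 0.2, i.e. 80% accuracy
print(autocorrect(decoded, DICTIONARY))        # brain
```

One wrong letter in a five-letter word is exactly the "1 in 5" error rate, and in this case a dictionary lookup is enough to recover the intended word.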

But… that was for the best participants. The average was lower, at 68%. That's still not bad! It's not great, and it would probably lead to frustration if you tried to use it instead of a keyboard. But surely accuracy will increase as the technology is refined, and Meta could design a product that tolerates a higher error rate than you get on a mobile device. So should we get ready to throw out our keyboards? Absolutely not.
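
Why would 68% be frustrating? A quick back-of-the-envelope calculation helps. It rests on the simplifying (and hypothetical) assumption that each character is decoded correctly or incorrectly independently of the others. Under that assumption, the chance that a whole word comes out right is the per-character accuracy raised to the word's length.

```python
def p_word_correct(char_accuracy: float, word_length: int) -> float:
    """Chance that every character in a word is right, assuming independent errors."""
    return char_accuracy ** word_length


for label, accuracy in [("best participants", 0.80), ("average participant", 0.68)]:
    p = p_word_correct(accuracy, word_length=5)
    print(f"{label}: {p:.0%} of five-letter words decoded perfectly")
```

That works out to roughly one in three five-letter words coming out perfect for the best participants, versus about one in seven for the average participant, before autocorrect steps in.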

The main issue is that to get this level of accuracy, they had to use MEG (magnetoencephalography). An MEG is an enormous, expensive machine that uses extraordinarily sensitive sensors to measure the tiny magnetic fields produced by the brain (a proxy for neural activity). They cost about $2,000,000 each and can only pick up the brain's faint magnetic signals inside a room shielded from other sources of magnetic interference, including the Earth's magnetic field. So if you have the millions, a room set aside to become a magnetically shielded vault, and want a mediocre brain-typing experience, Meta's got you covered.

The paper also reports results using MEG's little brother, EEG (electroencephalography), which measures electrical activity at the scalp using electrodes. EEGs are comparatively small and cheap, and plenty of companies make consumer EEG headsets that wouldn't be unreasonable to have in your household.

So how does Meta do with EEG? The accuracy drops from 68% to 33%. Good luck "typing" your Facebook password with that.
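
To put 33% in perspective, here is the same back-of-the-envelope math for a hypothetical 10-character password. It again assumes that every character is decoded independently, and autocorrect can't rescue a password anyway:

```latex
\begin{aligned}
P(\text{all } n \text{ characters correct}) &= a^{n} \\
\text{EEG: } a = 0.33,\ n = 10 &\;\Rightarrow\; 0.33^{10} \approx 1.5 \times 10^{-5} \ (\text{about 1 in } 65{,}000) \\
\text{MEG average: } a = 0.68,\ n = 10 &\;\Rightarrow\; 0.68^{10} \approx 0.021 \ (\text{about 1 in } 47)
\end{aligned}
```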

Beyond keystrokes

The Meta paper came out alongside a companion paper that uses the same data for a scientific question: How does the brain produce language? Specifically, how does it transform a sentence into (in this case) specific key presses on a keyboard?

They looked at the representation of the sentence being typed at different levels: the semantics of the phrase, the word, the syllable, and finally the letter. They found a sort of cascade: the brain first represents the highest levels of the hierarchy, and those representations stick around as the lower-level representations form.

That's cool, but… how the heck do they know a phrase is being represented in the brain?

They got a semantic representation of each phrase from a large language model, which can turn a phrase into an encoding: basically a series of numbers that represents its meaning (I talk about these kinds of encodings here). Then they looked for statistical relationships between the encoding and the brain activity. They did the same thing with words and syllables: get an encoding, and look for relationships.
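
Here is a rough sketch of what "looking for statistical relationships" can mean in practice. First, fit a linear (ridge regression) model that predicts brain activity from the encoding. Then check whether its predictions correlate with held-out recordings better than a shuffled control does. Everything here is hypothetical: random vectors stand in for LLM encodings, the brain data are simulated, and the companion paper's actual analysis may differ.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 phrases, each with a 16-number encoding (a stand-in
# for an LLM embedding) and simulated activity at 50 recording channels.
n_phrases, n_dims, n_channels = 200, 16, 50
encodings = rng.standard_normal((n_phrases, n_dims))
hidden_weights = rng.standard_normal((n_dims, n_channels))
brain = encodings @ hidden_weights + 3.0 * rng.standard_normal((n_phrases, n_channels))


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def columnwise_corr(A, B):
    """Pearson correlation between matching columns (one value per channel)."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / np.sqrt((A**2).sum(axis=0) * (B**2).sum(axis=0))


train_idx, test_idx = np.arange(150), np.arange(150, n_phrases)

# Real model: encodings paired with the brain activity they actually go with.
W = fit_ridge(encodings[train_idx], brain[train_idx], alpha=10.0)
r_real = columnwise_corr(encodings[test_idx] @ W, brain[test_idx]).mean()

# Control: the same model trained on encodings shuffled out of order.
shuffled = rng.permutation(train_idx)
W_shuffled = fit_ridge(encodings[shuffled], brain[train_idx], alpha=10.0)
r_shuffled = columnwise_corr(encodings[test_idx] @ W_shuffled, brain[test_idx]).mean()

print(f"held-out correlation: real {r_real:.2f}, shuffled control {r_shuffled:.2f}")
```

A clear gap between the real and shuffled correlations is evidence of a relationship. That is not the same as being able to decode which phrase was typed.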

The companion paper doesn't report how accurately you could decode the phrase, word, or syllable from the neural activity. Given that, it's reasonable to assume they couldn't "read out" these linguistic features from the brain signals with any meaningful accuracy. Seeing that there is a relationship between the neural activity and a phrase is very different from being able to decode the exact word or phrase. There are far more words and phrases than there are letters, so you would expect decoding a phrase to be much harder than decoding a keystroke.
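
The "more words than letters" point can be illustrated with a toy simulation. Suppose (hypothetically) that every item, whether a letter or a word, produces its own random brain pattern, and that each measurement adds the same amount of noise. A nearest-pattern decoder then does worse as the number of possible items grows, simply because there are more wrong answers close enough to be mistaken for the right one. The dimensions, noise level, and vocabulary sizes are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
N_DIMS, NOISE, N_TRIALS = 50, 2.0, 300


def decoding_accuracy(n_items: int) -> float:
    """Nearest-pattern decoding accuracy when choosing among n_items possibilities."""
    patterns = rng.standard_normal((n_items, N_DIMS))
    truth = rng.integers(n_items, size=N_TRIALS)
    measured = patterns[truth] + NOISE * rng.standard_normal((N_TRIALS, N_DIMS))
    # Squared distance, dropping the ||m||^2 term that is constant per row.
    scores = (patterns**2).sum(axis=1) - 2.0 * measured @ patterns.T
    return float((scores.argmin(axis=1) == truth).mean())


results = {n: decoding_accuracy(n) for n in (26, 500, 5_000)}
for n_items, accuracy in results.items():
    print(f"{n_items:>5} possibilities: accuracy {accuracy:.2f}, chance {1 / n_items:.4f}")
```

Even in this idealized setup, where every item is as distinct as every other, accuracy falls as the number of possibilities grows. The number of possible phrases is vastly larger than 5,000.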

So even if you bought a giant MEG, put it in your electromagnetically insulated room, and sent all your data to Meta, they wouldn't be able to read out, for example, your inner monologue. They can just read out, with some reasonable accuracy, what motor actions you're doing, assuming you're doing something fairly repetitive like typing on a keyboard.

Is that it, then? Is this as far as we've gotten with mindreading? No. Time for some hot water.

Enter my (academic) granddaddy

In his 2017 TED Talk, Jack Gallant declared:

[I]n the lifetimes of young people here there will be a brain decoding method that is cheap, portable, very powerful and that will become ubiquitous. People will be able to just wear a brain hat around and have their internal thoughts decoded all the time.

Now, people say a lot of dumb shit in TED Talks, so how seriously should we take this? Is Jack Gallant just some crackpot? I hope not. He was the PhD advisor of my PhD advisor (making him my academic grandfather; yes, this is a real term people use). He's published an enormous amount of influential neuroscience research. I like to think he's credible.

What makes Gallant speak so confidently about this?

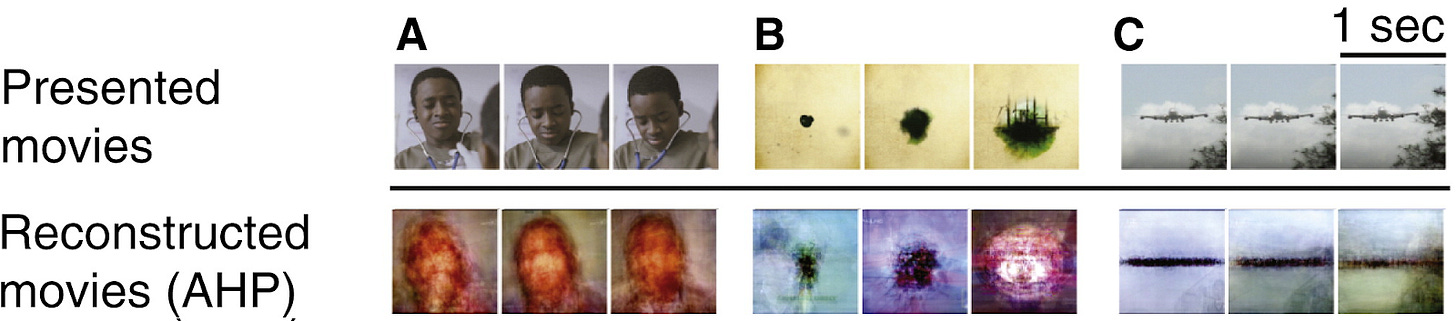

In 2011, Gallant published a paper decoding the movies people were watching from their neural activity. The videos are kind of eerie to watch—they aren't perfect, but clearly capture a blurry picture of what's going on in the scene being viewed (you can see them in the video included in the paper's Supplemental Information).

This was followed up in a 2016 paper where they mapped out the semantic representations across the brain by recording brain activity while people listened to narrative stories. They didn't do any decoding in that paper, but they dropped this nugget in:

One interesting possibility is that semantic areas identified here represent the same semantic domains during conscious thought. This suggests that the contents of thought, or internal speech, might be decoded using these voxel-wise models.

Multiple teams have continued this work, using advances in generative AI. They've built models that translate recorded neural activity into a representation that an AI image generator can use to reconstruct what people are looking at.
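
As I understand it, a common recipe behind these reconstructions works in two steps, though the published systems differ in the details. First, learn a linear mapping from brain activity to the internal representation (the "latent") that an image generator consumes. Then hand the predicted latent to the generator. The sketch below covers only the mapping step, on simulated data. It checks the mapping by identification: does the predicted latent look more like the latent of the image actually shown than like those of other images? The generator itself is left out, and all sizes and noise levels are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n_images, n_latent, n_voxels = 300, 32, 400

# Hypothetical data: each image has a latent vector (what a generator would
# consume), and simulated fMRI voxels respond linearly to it, plus heavy noise.
latents = rng.standard_normal((n_images, n_latent))
mixing = rng.standard_normal((n_latent, n_voxels))
voxels = latents @ mixing + 8.0 * rng.standard_normal((n_images, n_voxels))


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def normalize_rows(A):
    """Center and scale each row so that dot products become correlations."""
    A = A - A.mean(axis=1, keepdims=True)
    return A / np.linalg.norm(A, axis=1, keepdims=True)


train_idx, test_idx = np.arange(250), np.arange(250, n_images)

# Decoder: brain activity -> latent. A real system would now feed
# predicted_latents into an image generator to produce pictures.
W = fit_ridge(voxels[train_idx], latents[train_idx], alpha=1000.0)
predicted_latents = voxels[test_idx] @ W

# Identification: for each held-out scan, which of the 50 held-out images'
# true latents best matches the predicted latent?
similarity = normalize_rows(predicted_latents) @ normalize_rows(latents[test_idx]).T
hits = similarity.argmax(axis=1) == np.arange(len(test_idx))
identification_accuracy = float(hits.mean())
print(f"identification accuracy: {identification_accuracy:.2f} (chance {1 / len(test_idx):.2f})")
```

The reconstructions look "in the ballpark" rather than exact because the predicted latent is only a noisy estimate of the true one. A generator will happily turn a noisy latent into a crisp but somewhat wrong picture.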

Here are some examples—the "Stimulus" is the picture shown to the subject in the scanner, while the other three columns are images generated by three different models based only on the neural activity.

Researchers have taken a similar approach with language, using generative AI to re-generate the text based on neural activity. Just like the pictures above, it isn't a 100% accurate reproduction, but it gets in the ballpark of what the stimulus was:

So, great, it seems like scientists can already do fuzzy mind reading. It's just a matter of time before we get even better mind reading, right? Time for some lukewarm water.

My lukewarm take

When Jack Gallant declared there will be devices that can read our minds, he was betting that neuroimaging techniques would dramatically improve. His research and the other papers discussed in the previous section all use functional MRI. Like MEG scanners, MRI scanners are big, expensive machines, but they work by generating extremely powerful magnetic fields. It isn't a technology that's likely to ever have a small at-home model: the magnetic fields required are enormous and extremely dangerous around metal. I used to have to literally sweep participants with a metal detector before they were allowed in the MRI room.

Having good methods of imaging neural activity is the biggest constraint on our ability to decode thoughts. If we had something small and cheap that did what MEG does, I suspect we would already have "mind typing" products.

But even our big, expensive neuroimaging techniques kind of suck. They all get a pretty blurry picture of neural activity in the brain, only getting an approximation of the activity of enormous aggregates of at least hundreds of thousands of neurons.

For mind reading to get significantly better, we would need better neuroimaging. There are advances happening all the time—functional ultrasound imaging is a fairly recent technique, and incremental improvements in MRI techniques happen frequently. But there's no clear breakthrough of the sort we would need to expect a cheap and high-resolution at-home model anytime soon. Despite many limitations, functional MRI is still the gold standard for functional neuroimaging 35 years after the first studies using it.

We might expect the technology will just get better. Jack Gallant seems to think so, so this isn't a naive take. But there might just be physical limits to how good we can get at neuroimaging. Keeping the thing we're imaging alive is a big constraint on the kinds of technology we can use, and making the imager small and portable is another big one.

What about evil scientists who don't care about their mind reader being small and portable? More realistically, should we expect the military to start using neuroimaging as an interrogation technique (assuming they aren't already)?

One of the cool results from the Tang et al. paper (the language-decoding study above) is their demonstration that performance degrades when the participant doesn't cooperate: if the participant was focused on a different cognitive task, the decoding didn't work.

So you would need to get someone to sit still in a big scanner and think the thoughts you want to read out. If you wanted a proper name, that's going to be even harder, since the semantic maps are for broad conceptual categories, not specific people. So you might need them to do something really silly like imagine typing out each letter of the name. At that point, if they're that cooperative, you could probably just ask them.

Not to say brain imaging can't or won't be used for interrogation. Just that it isn't some magic that will allow you to pull any information out of a brain against someone's will.

Dystopia or Cool Tech?

So, umm, what should we think of all of this? We're not going to see mind readers next month, but they're not that far-fetched (unlike other sci-fi tropes, like mind uploading). This raises obvious concerns around privacy.

But this technology isn't completely dystopian: most of the research in this area is done to increase the accessibility of technology to those with various disabilities—a mind-keyboard could be a game changer for many who are unable to use a typical keyboard. The privacy issues might be manageable with regulations. Being able to input our thoughts directly into a machine might open the doors to super cool technology. Think of the video games we could play when unconstrained by the confines of controllers, keyboards, and mice!

Note for posterity: This article was typed mechanically with the author's physical hands in the year 2025. In accordance with any future Neural Transparency Act, I submit this statement in lieu of the mandated recording of my neural activity: No thought crimes were committed in the creation of this document.
