The Salmon of Neuroimaging Doubt

We shouldn't throw the baby out with the bathwater (or the brain scanning out with the multiple corrections)

Please hit the ❤️ “Like” button at the top or bottom of this article if you enjoy it. It helps others find it.

A little over 15 years ago, some researchers put a salmon into an fMRI scanner. They showed the salmon a series of photos of (human) individuals in different social situations and asked it to determine what emotion each person was experiencing, while the scanner continuously recorded the salmon's brain activity.

In analyzing the data, the researchers found a tiny portion of the already-tiny salmon brain "lit up", showing a statistically significant relationship between the scanner's measurements and the task. Have we somehow massively underestimated fish? Was this salmon following the researchers' instructions and considering the emotions in the photos?

Probably not. For one thing, the salmon was quite dead at the time of the experiment. This, sadly, wasn't a study showing some amazing feats of previously unknown fishy cognitive abilities.

The study authors were actually making a methodological point:

Can we conclude from this data that the salmon is engaging in the perspective-taking task? Certainly not. What we can determine is that random noise in the [signal] may yield spurious results

In other words, the random fluctuations in the measurements the scanner takes can sometimes produce false positives, making it seem like there is a relationship between the brain and task when there really isn't.

Does this mean fMRI is fatally flawed? Certain critics seem to think so. For example, here's Erik Hoel's summary of the situation:

[E]ver since researchers in the mid-aughts threw a dead salmon into an fMRI machine and got statistically significant results, it’s been well-known that neuroimaging, the working core of modern neuroscience, has all sorts of problems.

Gosh darn, that sounds bad. Critics like Hoel seem to think this study was a groundbreaking discovery that exposed modern neuroscience as a failure. Amid concerns about the replication crisis, you might take it as yet another example of science gone off the rails.

Reports of fMRI's death have been greatly exaggerated

Sorry for the double-fake-out here, but the salmon study not only doesn't show amazing fishy cognitive abilities, it also doesn't show some fatal flaw in fMRI.

The study was just the researchers' way of making an amusing point about an important but already well-known statistical issue: multiple comparisons.

Statistical tests are built on probability. Measurements tend to be noisy: they have random fluctuations. Statistics lets us look past all that noise and say how likely it is that two factors are really related, or whether an apparent relationship is likely due to chance. When we say something is "statistically significant", we're just saying that a result this strong would be unlikely to happen by chance alone if there were no real relationship.
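As a rough illustration (a sketch, not how any particular fMRI package computes things), here's how a p-value works for one common test, the two-sided z-test. The p-value is the probability of seeing a result at least as extreme as the one observed if there were no real relationship. By convention, p < 0.05 gets called "statistically significant". The function name `two_sided_p_value` is made up for this example.

```python
from statistics import NormalDist


def two_sided_p_value(z: float) -> float:
    """Chance of a z-score at least this extreme (in either direction) by chance alone.

    Assumes the test statistic follows a standard normal distribution when
    there is no real effect, as in a textbook z-test.
    """
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


if __name__ == "__main__":
    for z in (0.5, 1.96, 3.0):
        p = two_sided_p_value(z)
        verdict = "significant" if p < 0.05 else "not significant"
        print(f"z = {z}: p = {p:.4f} ({verdict} at the 0.05 level)")
```

A z-score of about 1.96 sits right at the conventional 0.05 cutoff. Larger z-scores give smaller p-values.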

Since statistical tests are probabilistic, if you do a bunch of them, some will show a "statistically significant" relationship due to chance. They're false positives. The more tests you do, the more likely you are to get some false positives, as illustrated by this XKCD comic:

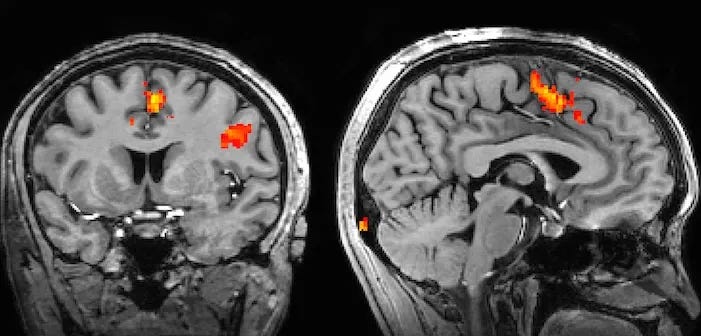
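The arithmetic behind the comic is simple if we assume every tested relationship is actually absent and the tests are independent. Real fMRI voxels aren't independent, so treat this as a back-of-the-envelope sketch. Each test then has probability α of a false positive, so the chance that at least one of m tests comes out "significant" is 1 − (1 − α)^m. With α = 0.05 and 20 tests, that's roughly 64%. The function name below is illustrative.

```python
def chance_of_any_false_positive(alpha: float, n_tests: int) -> float:
    """Probability that at least one of n independent tests is a false positive.

    Assumes every null hypothesis is true, so each test independently has
    probability alpha of crossing the significance threshold by chance.
    """
    return 1.0 - (1.0 - alpha) ** n_tests


if __name__ == "__main__":
    for n_tests in (1, 20, 100, 100_000):
        risk = chance_of_any_false_positive(0.05, n_tests)
        print(f"{n_tests:>7} tests at alpha = 0.05: {risk:.3f}")
```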

You might be wondering what multiple comparisons have to do with brains lighting up. The secret behind those fancy, colorful brain images you see in science news articles is that each "voxel" (the three-dimensional version of a pixel) that gets a color represents the result of a statistical test. If a voxel "lights up", its test passed the threshold for statistical significance.

But that means if you are analyzing fMRI data, you are potentially performing hundreds of thousands of statistical tests! You're bound to get some false positives, and have a brain seemingly light up like a Christmas tree regardless of what's actually going on in there.
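Here's a toy "dead salmon" simulation to make this concrete. It's a sketch under simplifying assumptions, not a model of real fMRI data: each hypothetical voxel is pure Gaussian noise with a known standard deviation of 1, there's no real task effect anywhere, and each voxel gets a two-sided z-test comparing made-up "task on" and "task off" scans. The voxel count, scan count, and function names are all illustrative. Even so, roughly 5% of the voxels (around 500 of 10,000) should pass an uncorrected p < 0.05 threshold.

```python
import random
from statistics import NormalDist, fmean


def noise_voxel_p_value(n_per_condition: int, rng: random.Random) -> float:
    """Two-sided z-test p-value for one hypothetical voxel containing only noise.

    Assumption: noise is Gaussian with known standard deviation 1, so the
    difference between the two condition means has standard error
    sqrt(2 / n_per_condition).
    """
    task_on = [rng.gauss(0.0, 1.0) for _ in range(n_per_condition)]
    task_off = [rng.gauss(0.0, 1.0) for _ in range(n_per_condition)]
    z = (fmean(task_on) - fmean(task_off)) / (2.0 / n_per_condition) ** 0.5
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def count_lit_voxels(n_voxels: int = 10_000, n_per_condition: int = 20,
                     alpha: float = 0.05, seed: int = 0) -> int:
    """Count noise-only voxels that 'light up' at an uncorrected threshold."""
    rng = random.Random(seed)
    return sum(
        noise_voxel_p_value(n_per_condition, rng) < alpha
        for _ in range(n_voxels)
    )


if __name__ == "__main__":
    lit = count_lit_voxels()
    print(f"{lit} of 10000 noise-only voxels passed p < 0.05 (uncorrected)")
```

Real fMRI analyses can involve many more voxels, and neighboring voxels are correlated, but the basic lesson carries over: without a correction, noise alone is enough to make things light up.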

Are scientists complete morons who haven't recognized this obvious issue with running statistical tests on data like fMRI data? Is there a cabal of statisticians conspiring to keep the fishy truth from getting out?

Of course not. The boring reality is that there are standard methods for correcting for multiple comparisons. If you do more than one comparison, you should be stricter about your threshold for calling something "statistically significant", and there's straightforward math telling you what the new threshold should be to keep your overall chance of getting a false positive about the same as if you were performing a single test.
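The simplest such rule is the Bonferroni correction, used here as a stand-in (we're not claiming it's what the salmon authors used): with m tests, require p < α/m instead of p < α. For two tests at α = 0.05, each test needs p < 0.025. Its guarantee that the chance of any false positive stays at or below α holds even when tests are correlated, as neighboring fMRI voxels are, though it then tends to be overly strict. The sketch below draws simulated p-values for 100,000 tests with no real effects. The function names and numbers are illustrative.

```python
import random
from typing import Tuple


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test cutoff that keeps the chance of any false positive at or below alpha."""
    return alpha / n_tests


def simulate_null_tests(n_tests: int = 100_000, alpha: float = 0.05,
                        seed: int = 42) -> Tuple[int, int]:
    """Count 'significant' results among tests where nothing is really going on.

    Under a true null hypothesis (with a continuous test statistic), p-values
    are uniformly distributed on [0, 1], so we draw them directly.
    Returns (uncorrected count, Bonferroni-corrected count).
    """
    rng = random.Random(seed)
    p_values = [rng.random() for _ in range(n_tests)]
    cutoff = bonferroni_threshold(alpha, n_tests)
    uncorrected = sum(p < alpha for p in p_values)
    corrected = sum(p < cutoff for p in p_values)
    return uncorrected, corrected


if __name__ == "__main__":
    print("Two tests: each needs p <", bonferroni_threshold(0.05, 2))
    uncorrected, corrected = simulate_null_tests()
    print(f"Uncorrected false positives: {uncorrected}")
    print(f"Bonferroni false positives:  {corrected}")
```

With 100,000 tests you'd expect around 5,000 uncorrected false positives, and after the correction, usually none.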

The salmon study was just showing that fMRI analysis requires you to use these methods. The authors are very clear that when they applied either of the two most common corrections for multiple comparisons, the salmon's brain no longer "lit up":

[R]andom noise in the [signal] may yield spurious results if multiple comparisons are not controlled for. [Methods for correcting for multiple comparisons] are widely available in all major fMRI analysis packages.
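Two widely used families of corrections control either the family-wise error rate (the chance of even one false positive) or the false discovery rate (the expected fraction of "lit up" results that are false). As an illustration of the second idea, here's a sketch of the Benjamini-Hochberg procedure, one standard way to control the false discovery rate when tests are independent or positively dependent. The function name and example p-values are made up, and this isn't necessarily the exact implementation used by the salmon authors or by any fMRI package.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> List[int]:
    """Return indices of tests declared significant by the Benjamini-Hochberg procedure.

    Sort the p-values, find the largest rank k with p_(k) <= (k / m) * q,
    and reject the k hypotheses with the smallest p-values.
    """
    m = len(p_values)
    # Test indices ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank  # keep the largest rank that passes (step-up rule)
    return sorted(order[:k_max])


if __name__ == "__main__":
    p_values = [0.01, 0.02, 0.03, 0.04]
    print("Benjamini-Hochberg keeps:", benjamini_hochberg(p_values))
```

Note the contrast with a Bonferroni-style cutoff: with these four p-values, requiring p < 0.05 / 4 = 0.0125 would keep only the first one, while Benjamini-Hochberg keeps all four because it tolerates a small, controlled fraction of false discoveries.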

Far from making the case that fMRI is fatally flawed, the authors were making an amusing illustration of why using the proper statistical tools to analyze fMRI is important—and pointing out how easy it is to do the analysis properly. It was a minor in-the-weeds statistical point already known to most researchers that got widespread attention because of the amusing way it was done, not because it was a study that had dramatic implications for the field.

It's possible to have too much skepticism

I love pointing out flaws in crappy methodology. I am, after all, a skeptic, and am well aware of the replication crisis and how theoretical and methodological issues have led to it.

But when critics like Hoel point to this study as evidence of a deep flaw in fMRI, they're being downright misleading.

I'm no fMRI fanboy. I've written about how limited fMRI (and our methods for recording brain activity more generally) can be. I've pointed out that brain imaging as depicted in popular media is more akin to Star Trek techno-magic than to present-day reality. I've likened using fMRI to understand the brain to trying to understand your computer's inner workings by feeling which parts get warm while you use it.

All that said, I think it's easy to become too pessimistic, or to focus on every weakness of a methodology and miss the forest for the trees. fMRI has had a lot of success! We've learned a lot about where different functions are performed in the brain, tested competing theories about which cognitive functions the brain performs, and shown how distributed neural representations are. We've also been able to do cool things like decode images and sentences from fMRI measurements of brain activity, effectively a fuzzy sort of mind reading, which clearly indicates these methods capture rich information about what's going on in the brain.

Findings from fMRI regularly feed into studies using other methods in neuroscience. Each method, including fMRI, is flawed. But together, they help us triangulate a better understanding of the brain and cognition.

Skeptics like myself are keen on the phrase "If you open your mind too much, your brain will fall out". But sometimes I think it's worth reminding ourselves that it's also possible to close your mind so much that your brain suffocates (which is bad, since what fMRI measures is changes in blood oxygenation in the brain, so if your brain were suffocated, maybe fMRI really wouldn't work). But I guess not closing your mind too much is what the original "open your mind" phrase was about. Maybe I didn't need to invent a new phrase to invert the skeptical one that was itself an inversion of the original. Oh well. It's done.

(Thanks to

for the question that inspired this post)

If you’re a Substack writer and have been enjoying Cognitive Wonderland, consider adding it to your recommendations. I really appreciate the support.
